Gradle User Manual: Version 9.3.1

- RELEASES

- RUNTIME AND CONFIGURATION

- DSL AND APIS

- BEST PRACTICES

- CORE PLUGINS

- DEPENDENCY MANAGEMENT

- Dependency Management

- 1. Declaring dependencies

- 2. Dependency Configurations

- 3. Declaring repositories

- 4. Centralizing dependencies

- 5. Dependency Constraints and Conflict Resolution

- Dependency Resolution

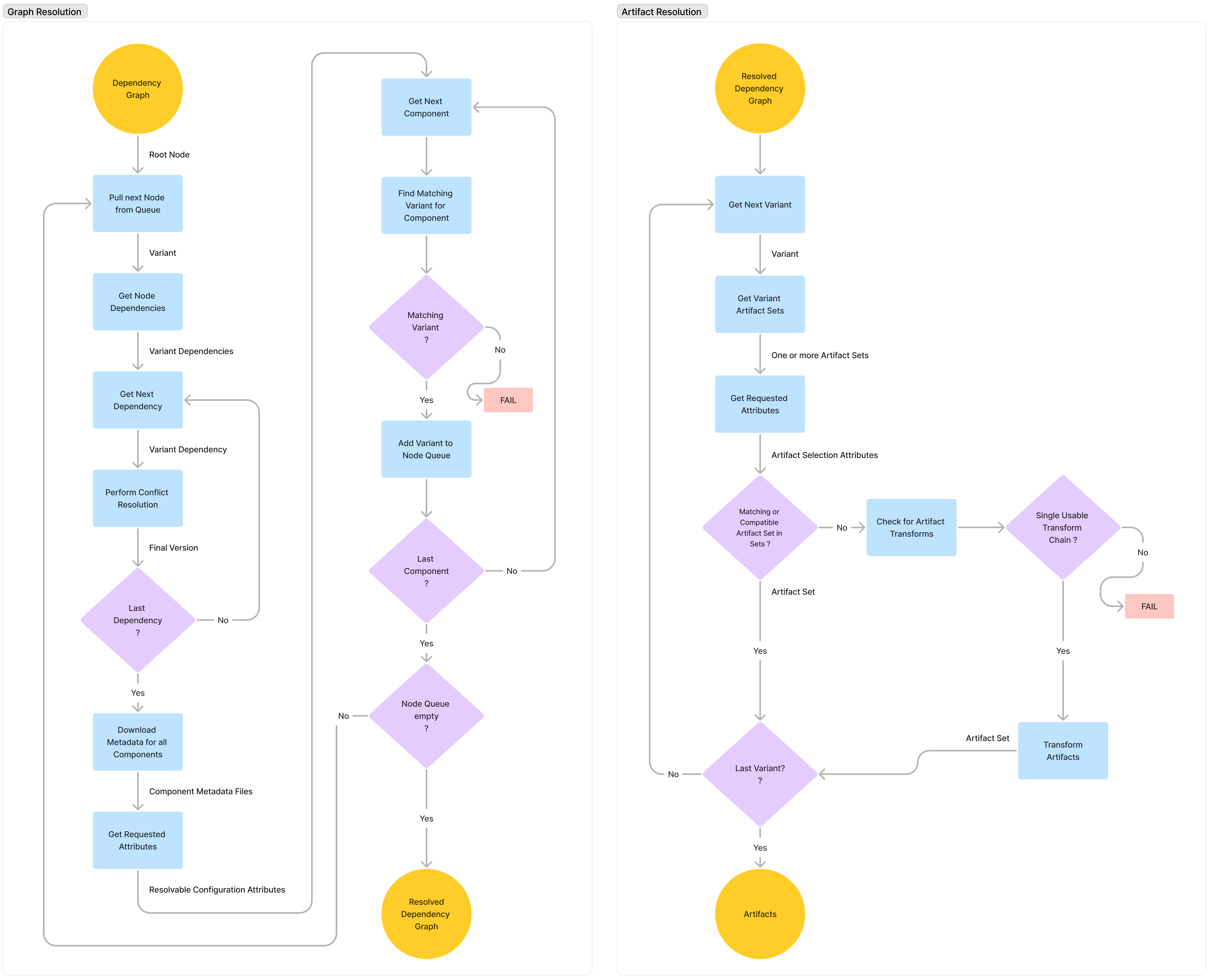

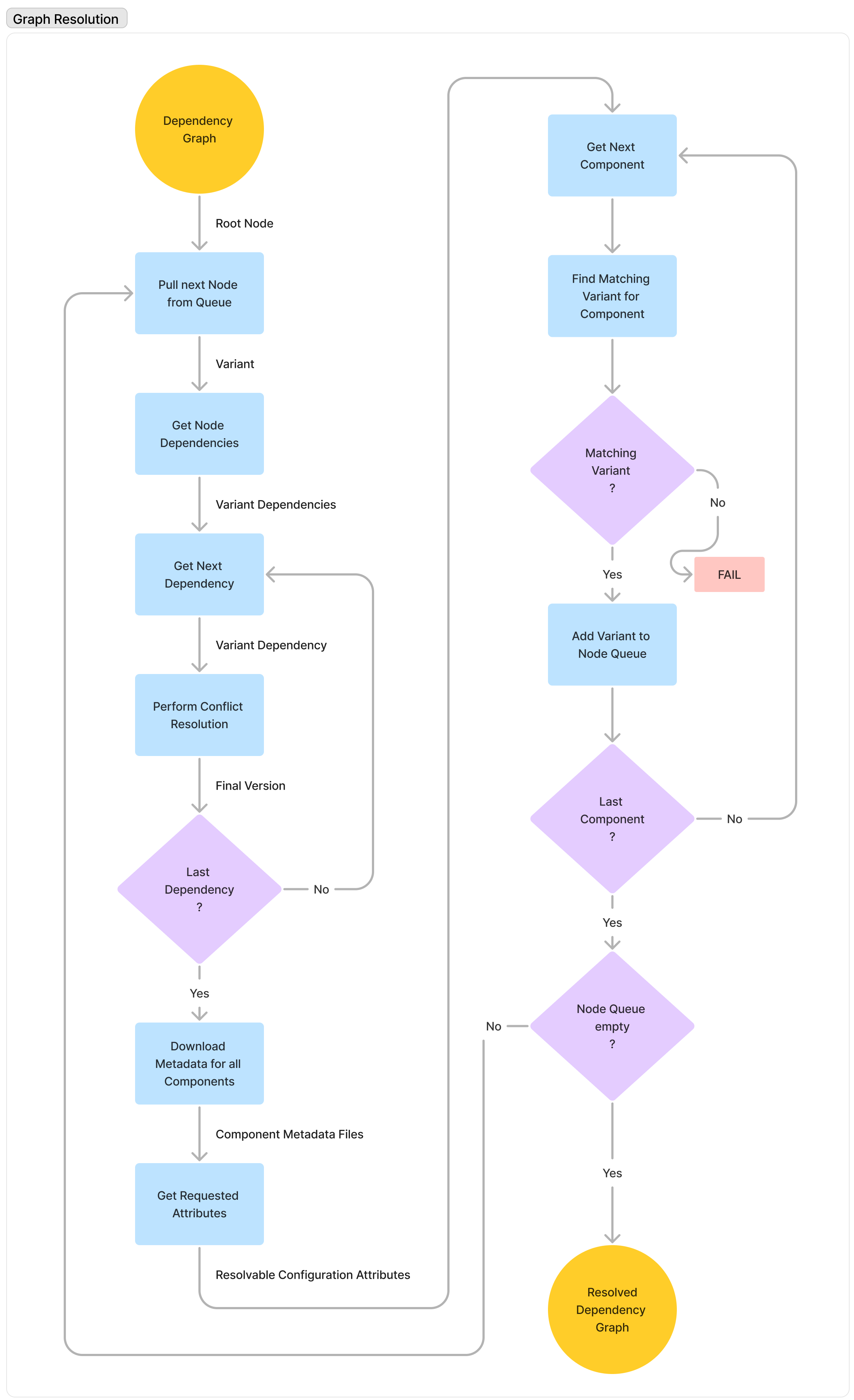

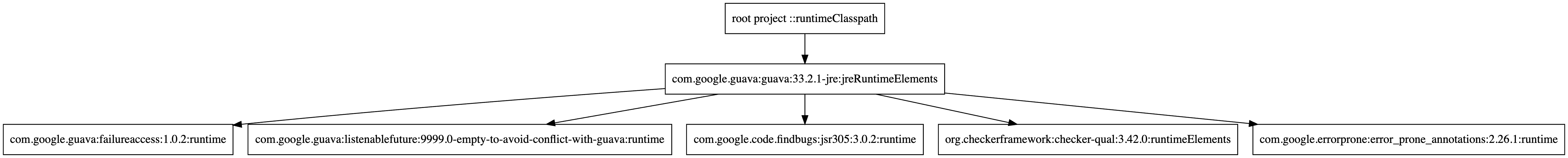

- Graph Resolution

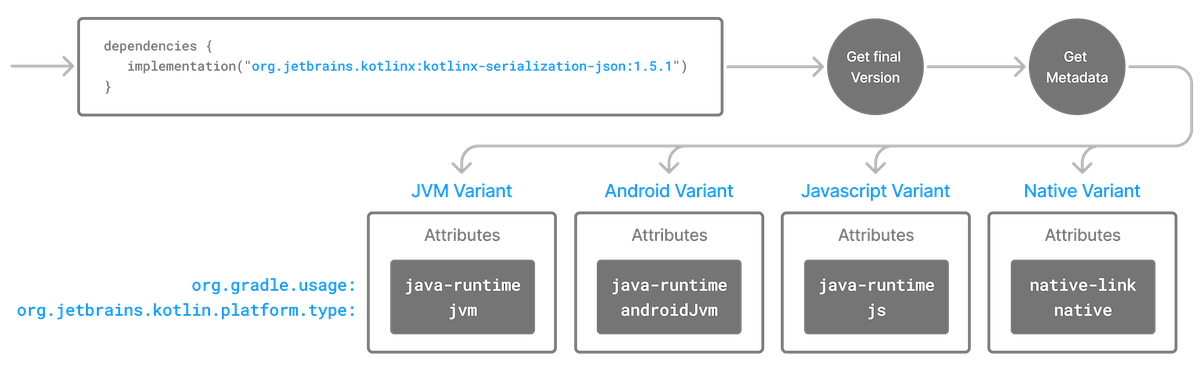

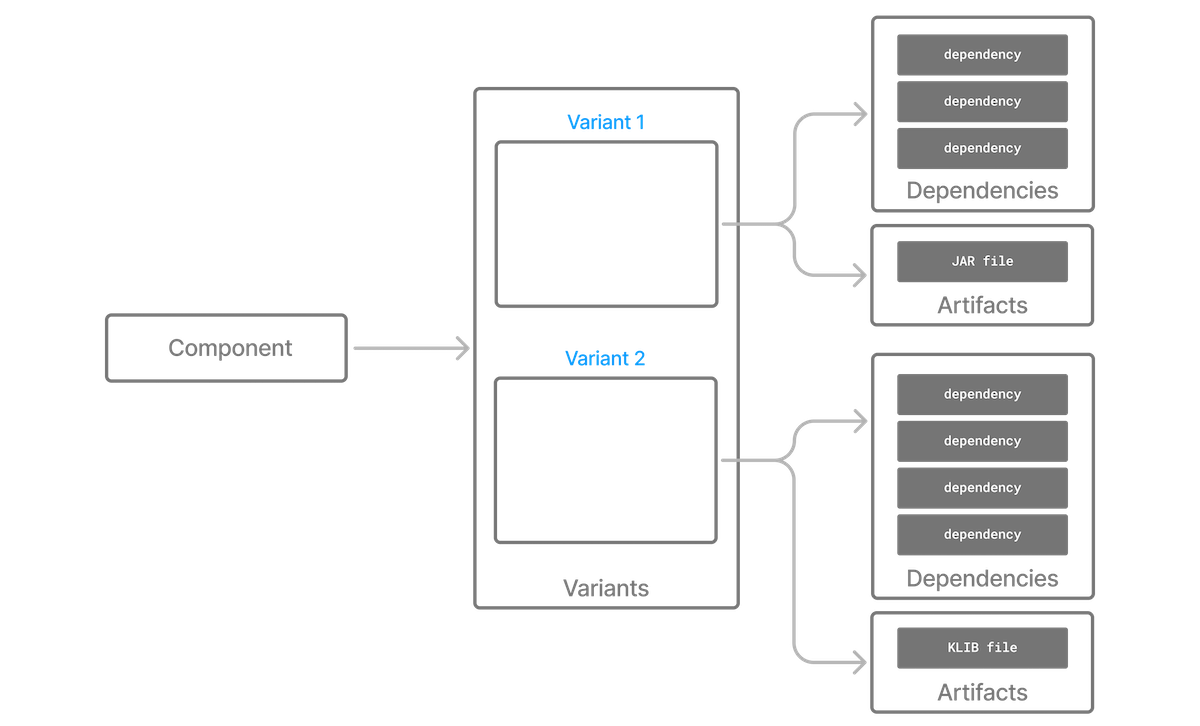

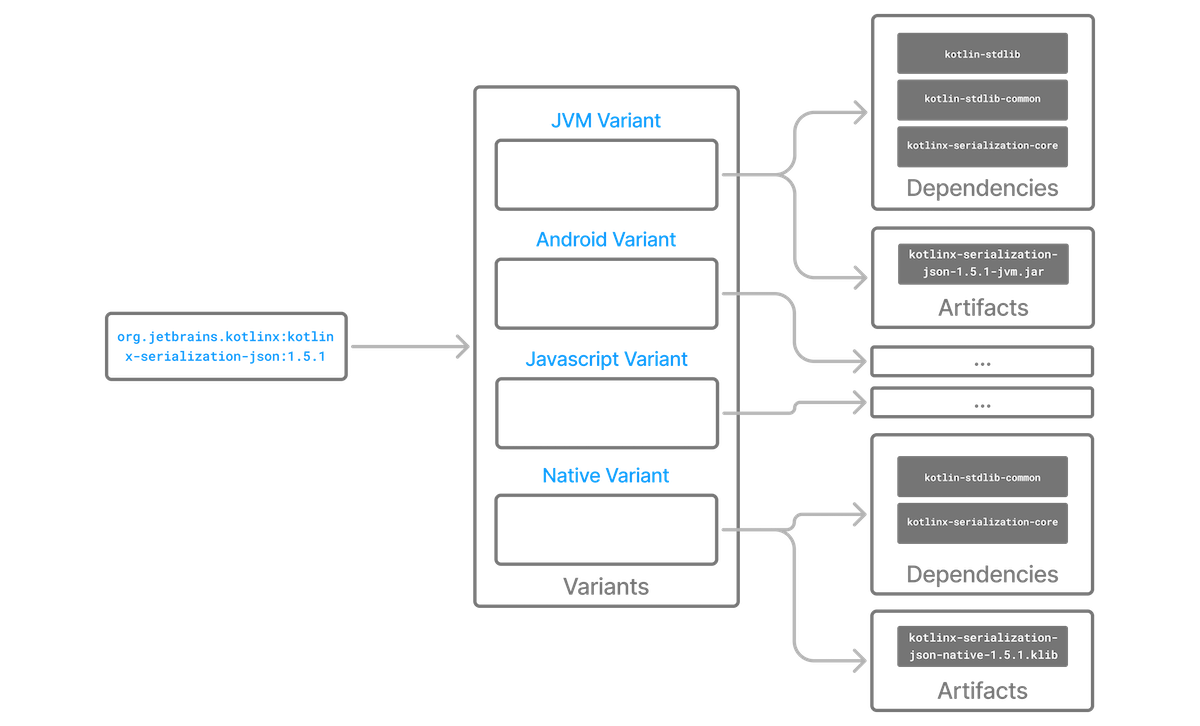

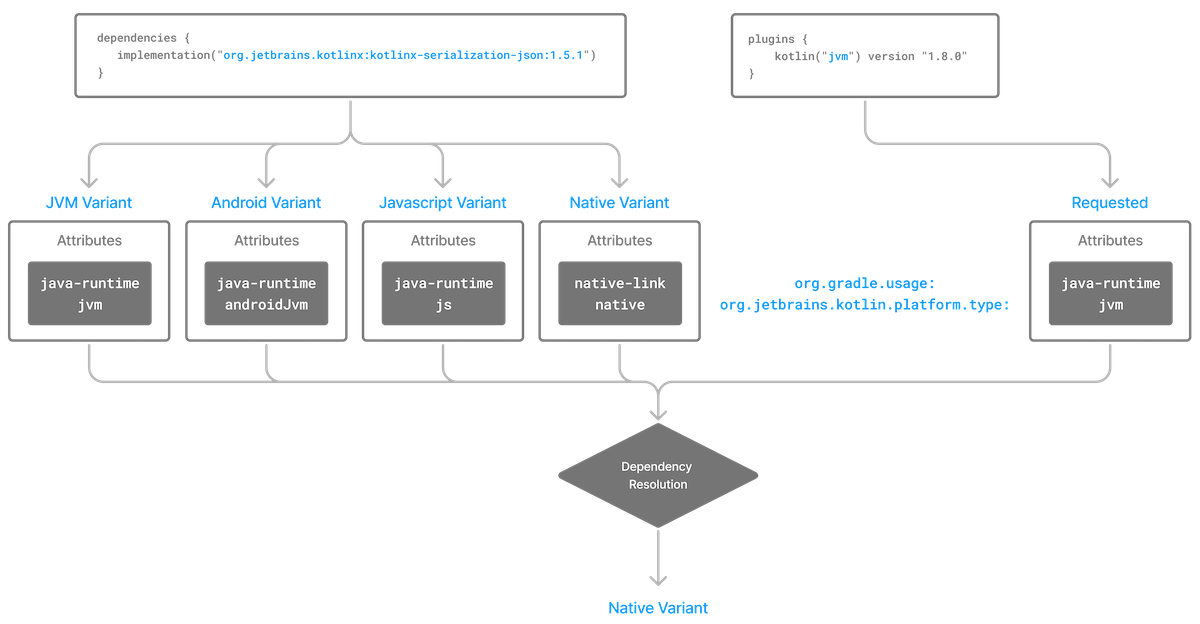

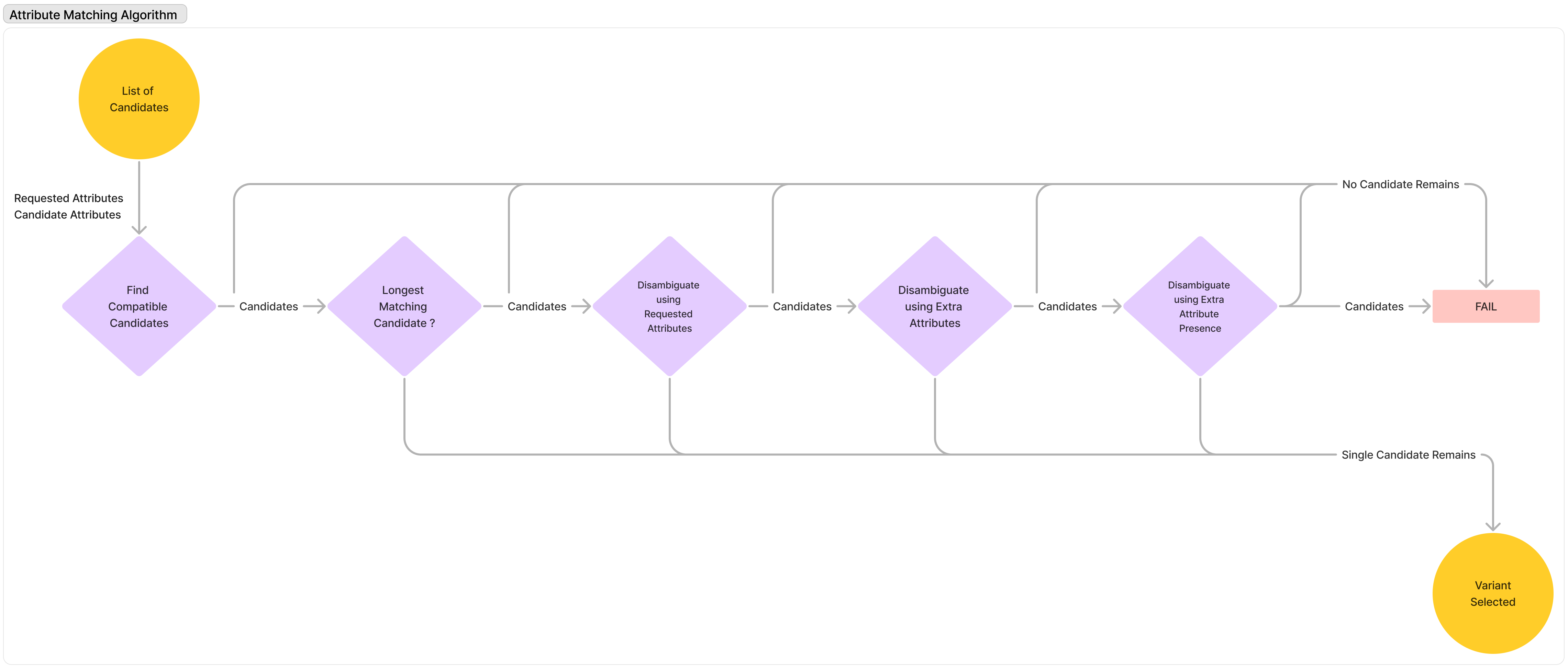

- Variant Selection and Attribute Matching

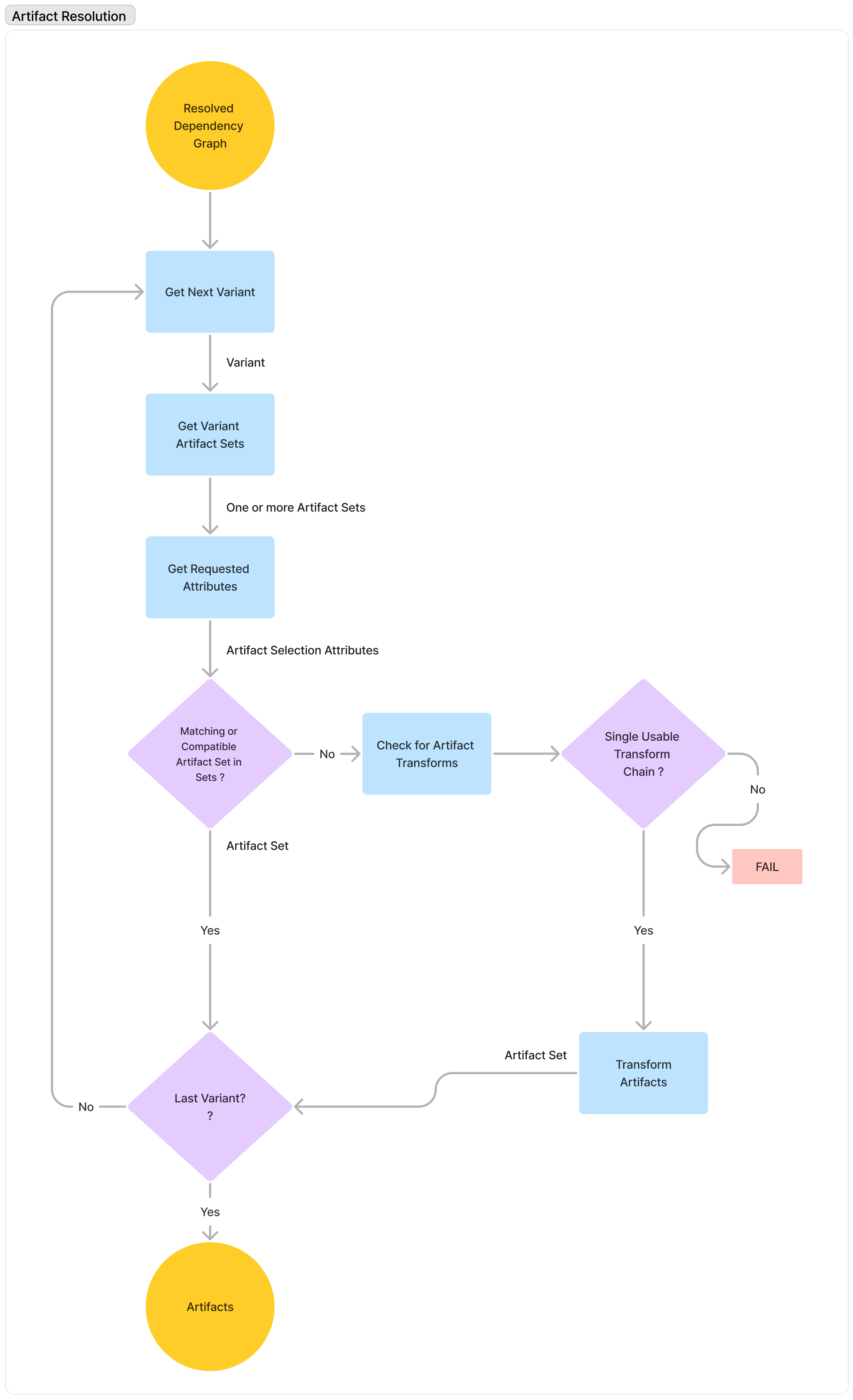

- Artifact Resolution

- Declaring Dependencies

- Viewing Dependencies

- Declaring Versions and Ranges

- Declaring Dependency Constraints

- Creating Dependency Configurations

- Gradle distribution-specific dependencies

- Verifying dependencies

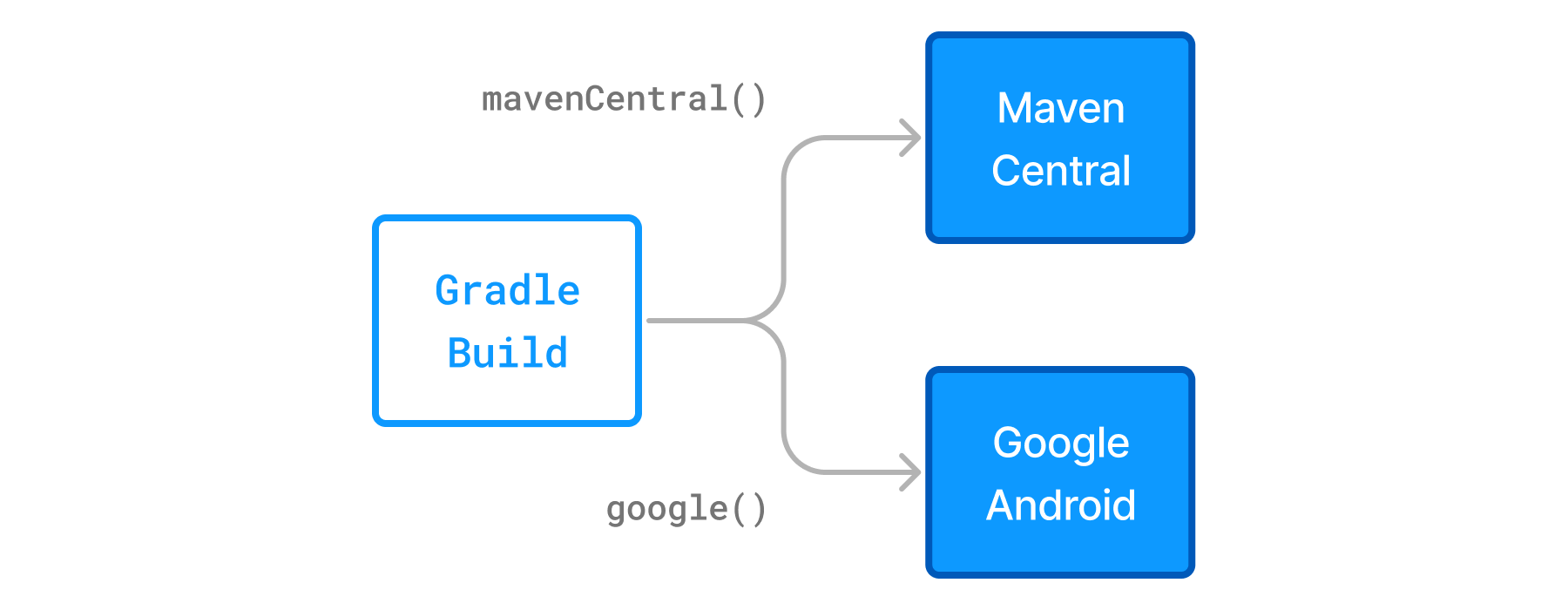

- Declaring Repositories Basics

- Centralizing Repository Declarations

- Repository Types

- Metadata Formats

- Supported Protocols

- Filtering Repository Content

- Platforms

- Version Catalogs

- Using Catalogs with Platforms

- Locking Versions

- Using Resolution Rules

- Modifying Dependency Metadata

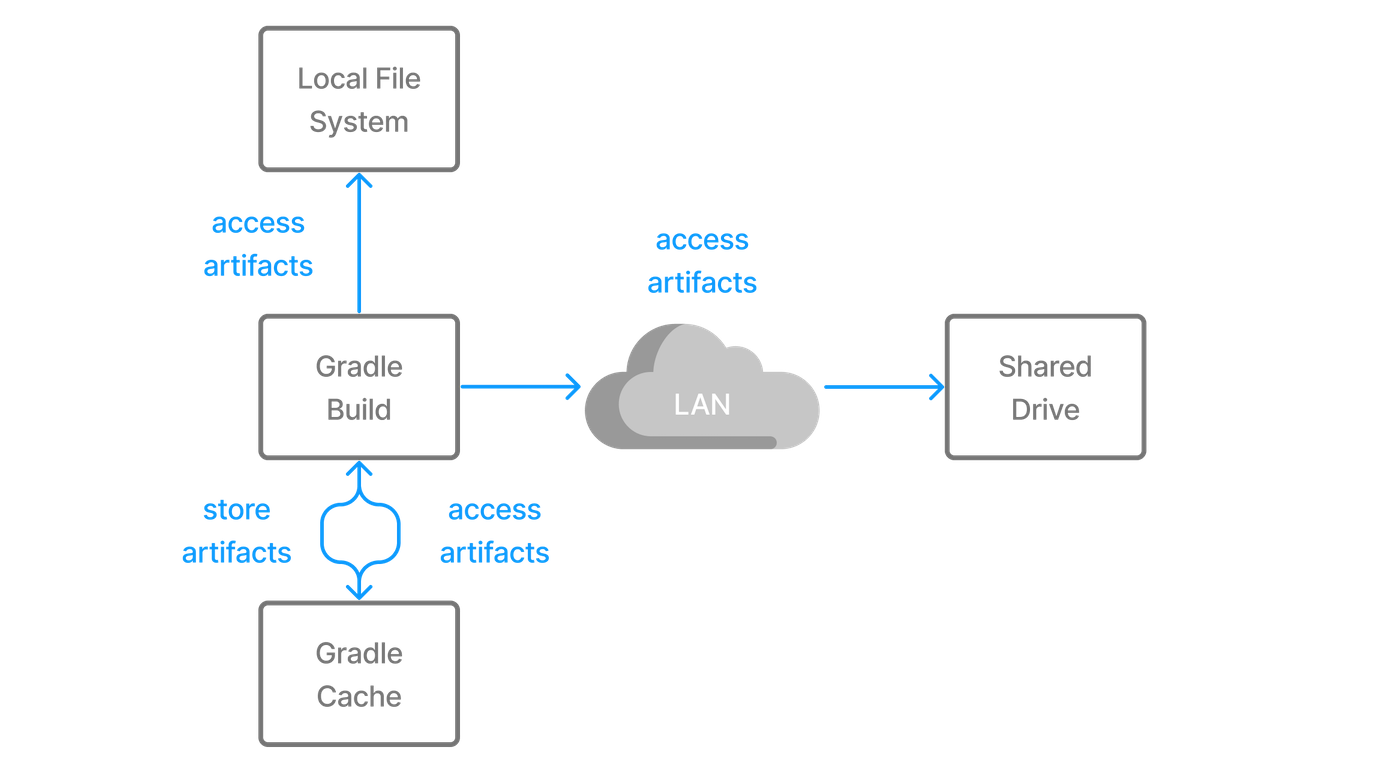

- Dependency Caching

- Dependency Resolution Consistency

- Resolving Specific Artifacts

- Capabilities

- Variants and Attributes

- Artifact Views

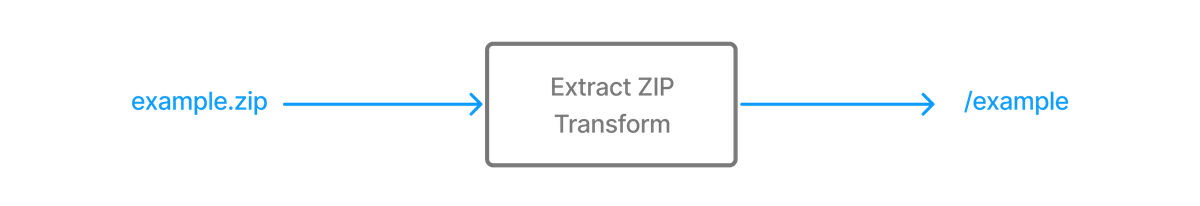

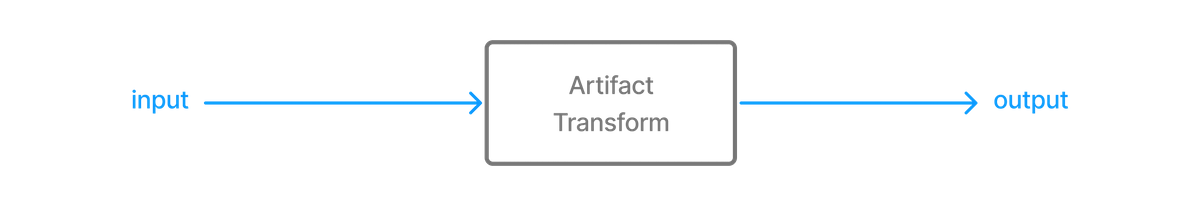

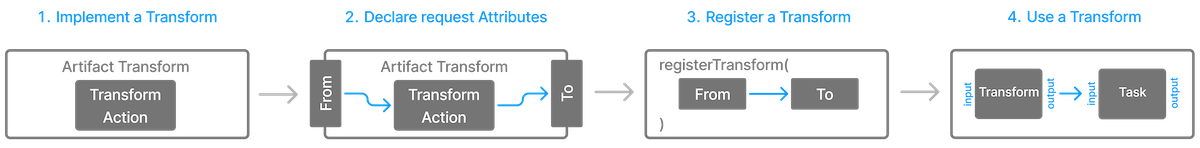

- Artifact Transforms

- Publishing a project as module

- Gradle Module Metadata

- Signing artifacts

- Customizing publishing

- The Maven Publish Plugin

- The Ivy Publish Plugin

- GRADLE MANAGED TYPES

- STRUCTURING BUILDS

- TASK DEVELOPMENT

- PLUGIN DEVELOPMENT

- JVM BUILDS

- C++ BUILDS

- SWIFT BUILDS

- OTHER TOPICS

- OPTIMIZING GRADLE BUILDS

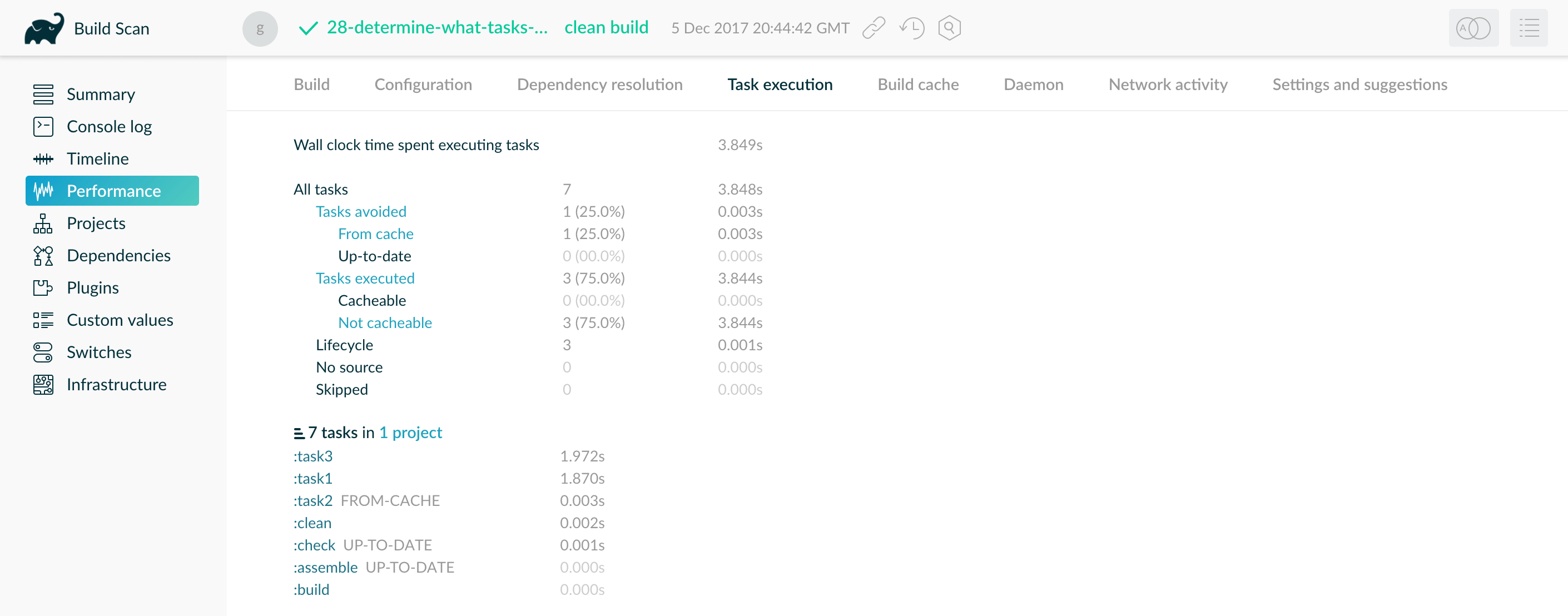

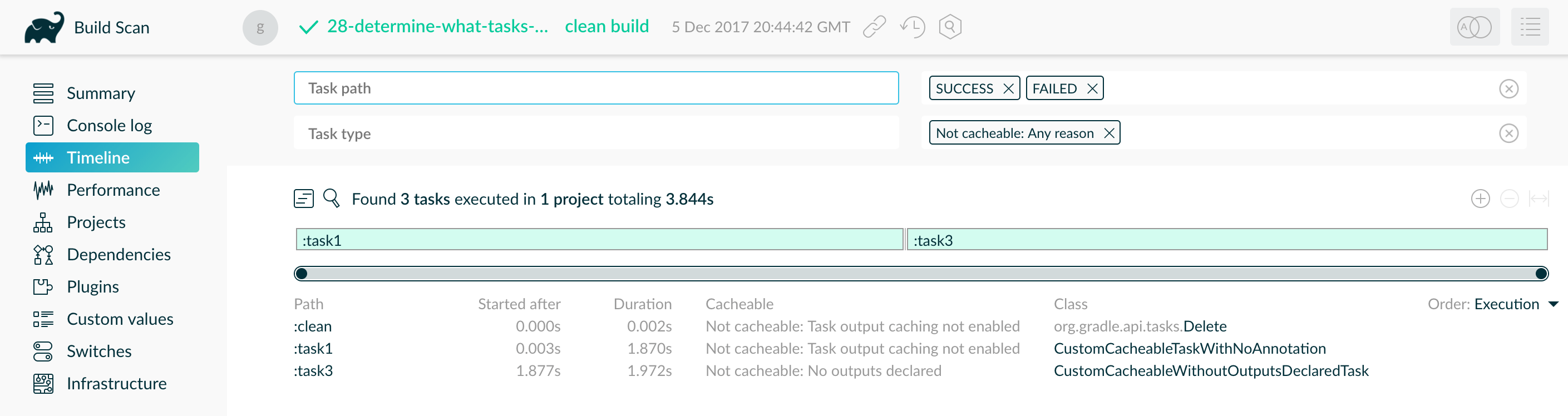

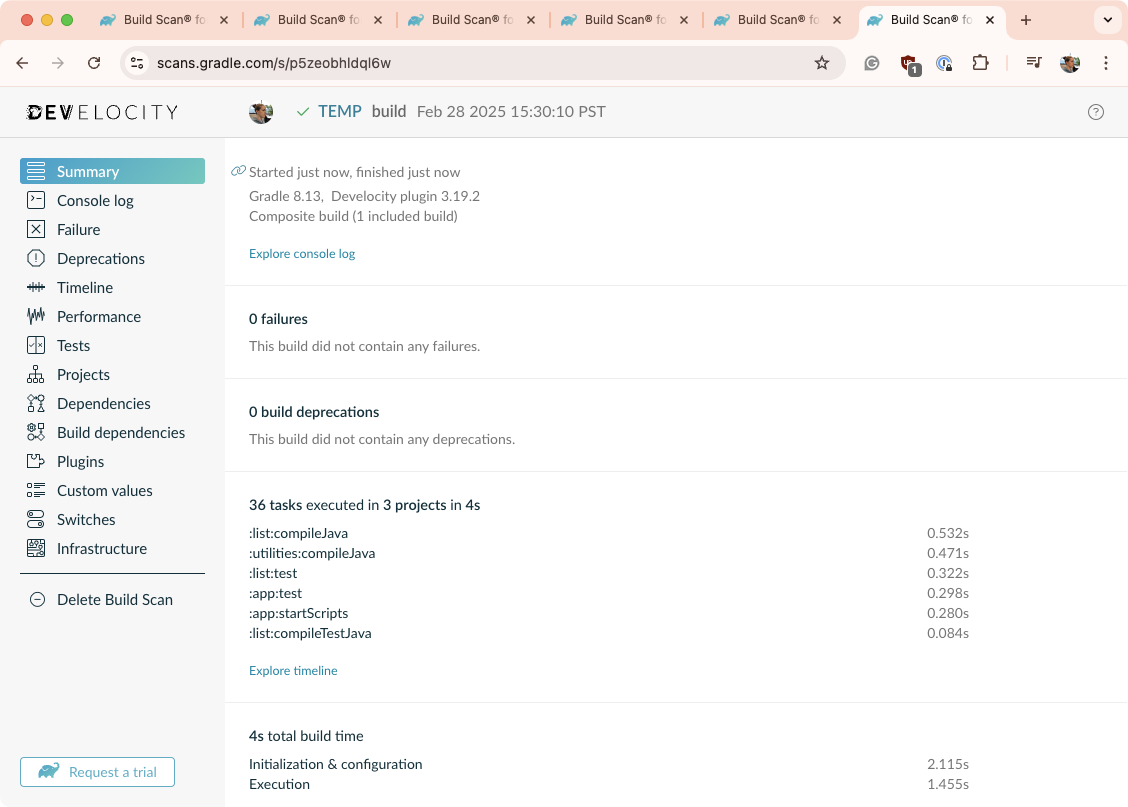

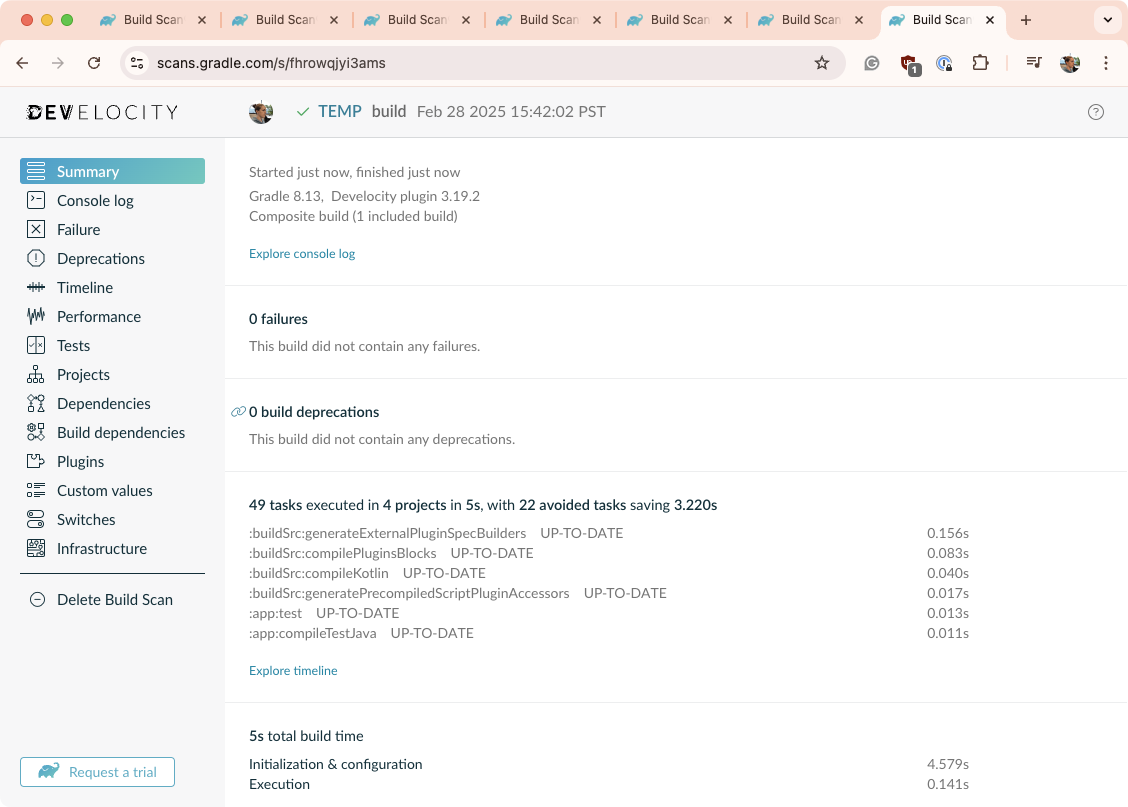

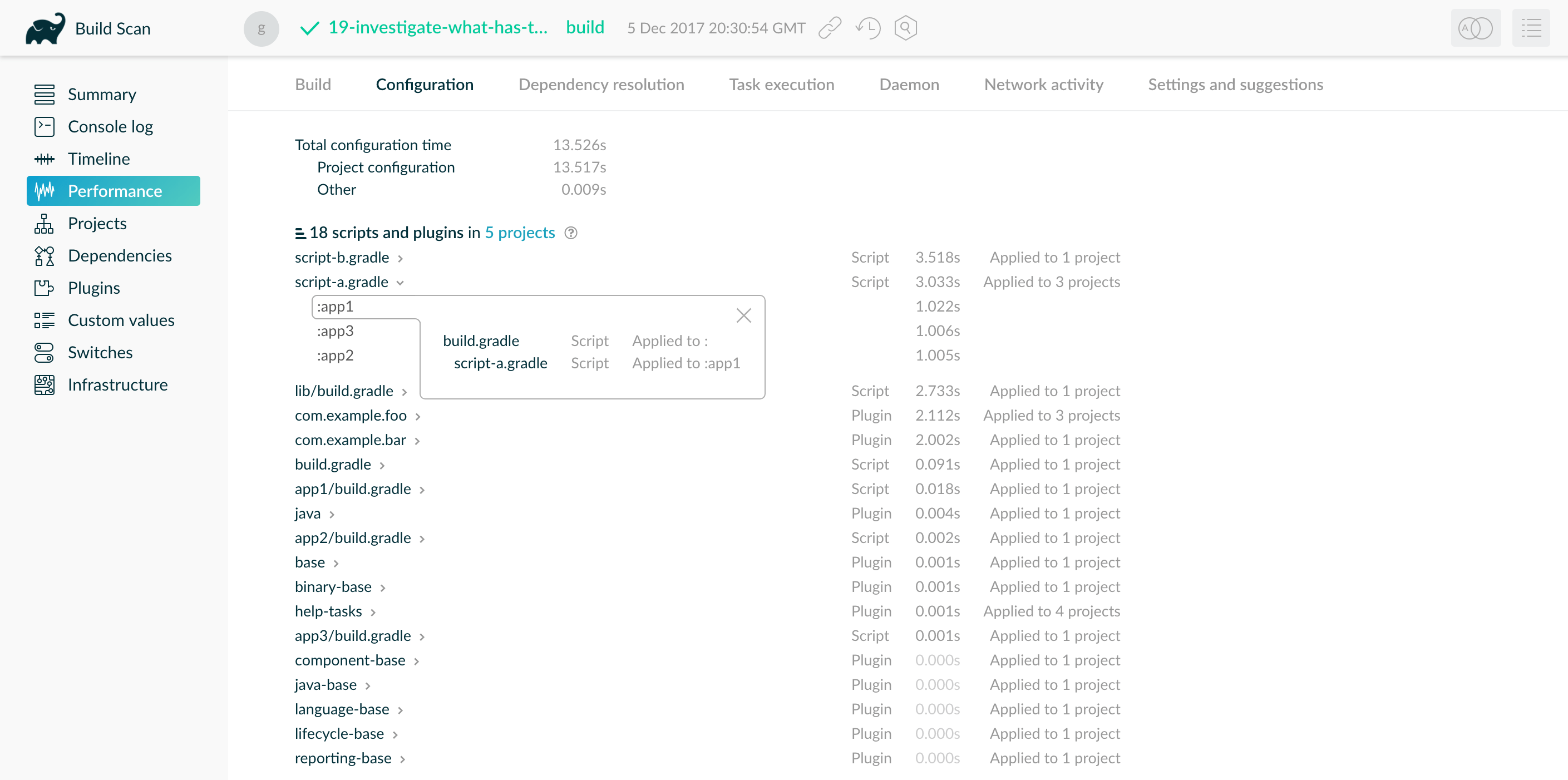

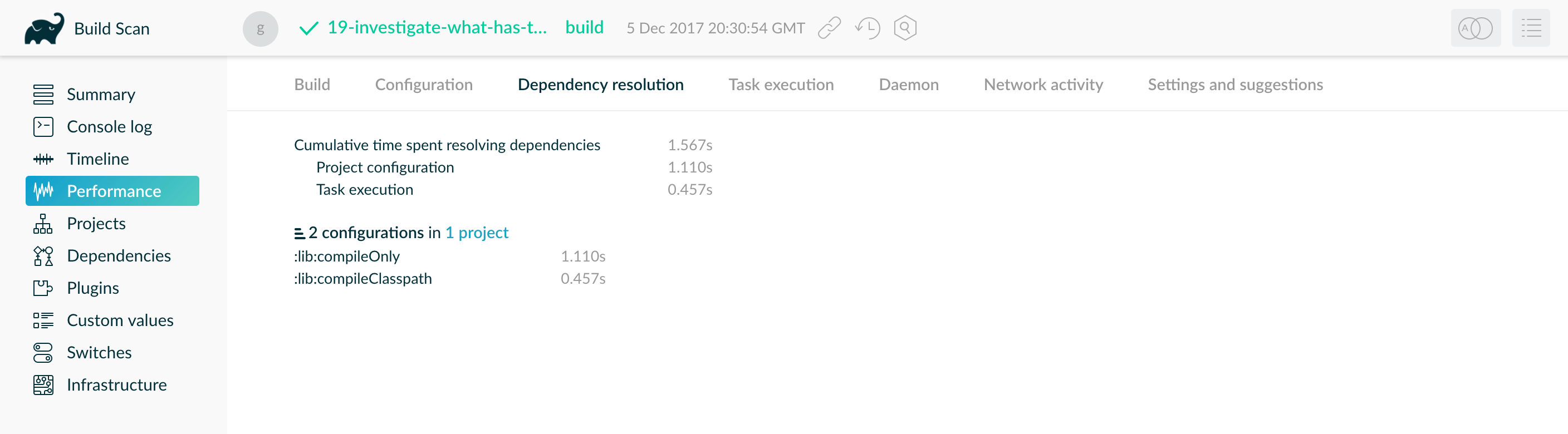

- Improve the Performance of Gradle Builds

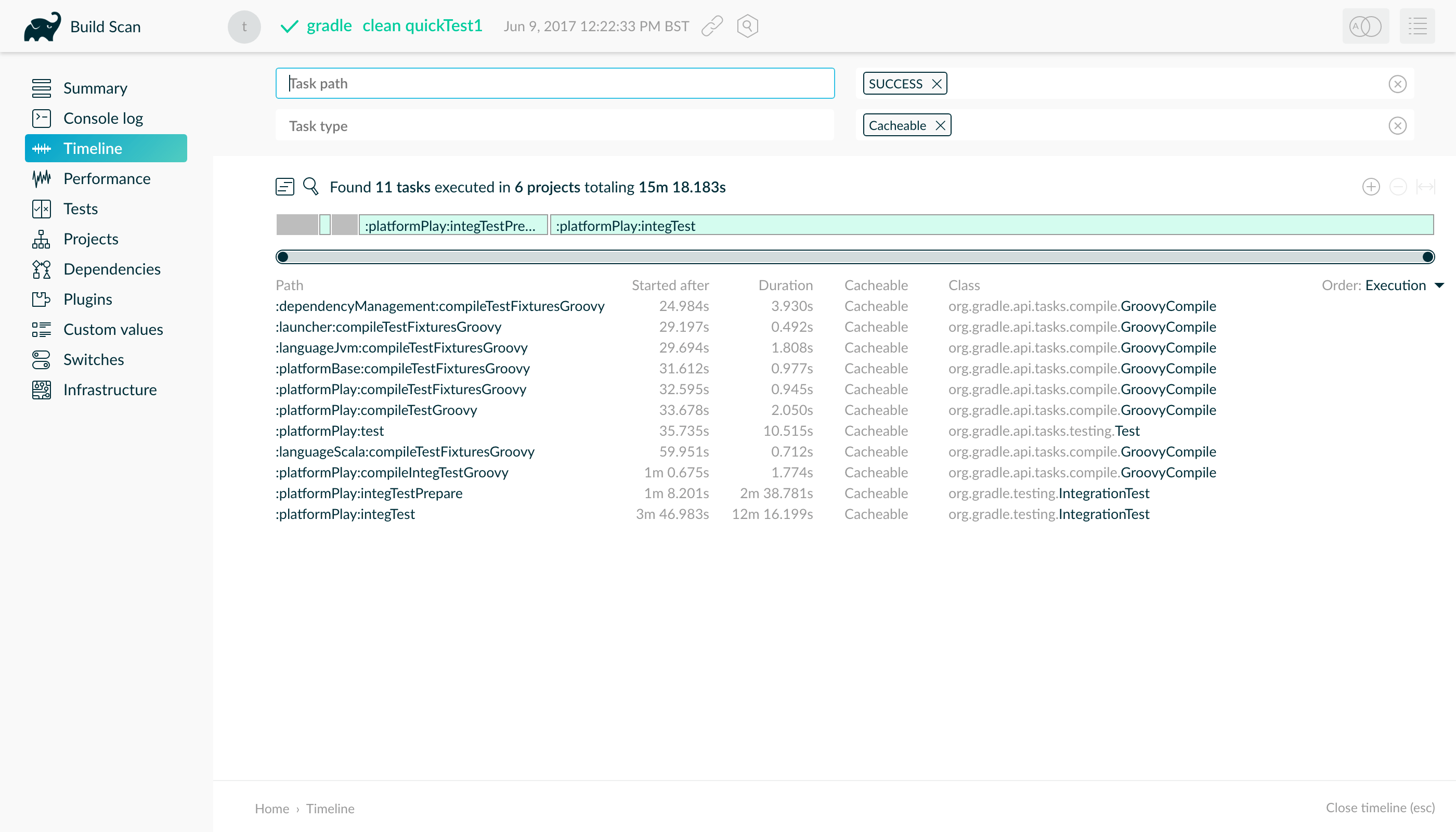

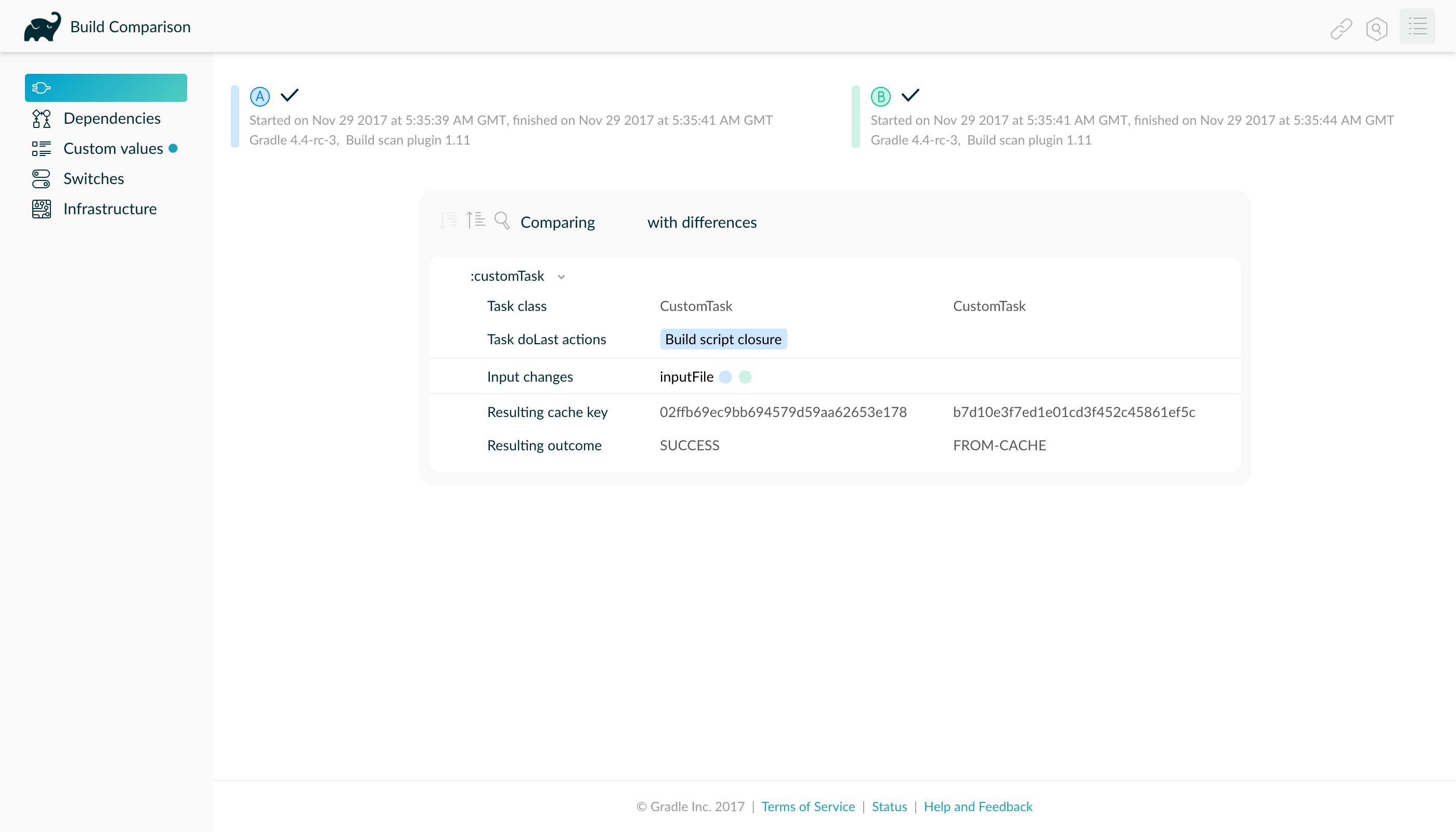

- Build Cache

- Use cases for the build cache

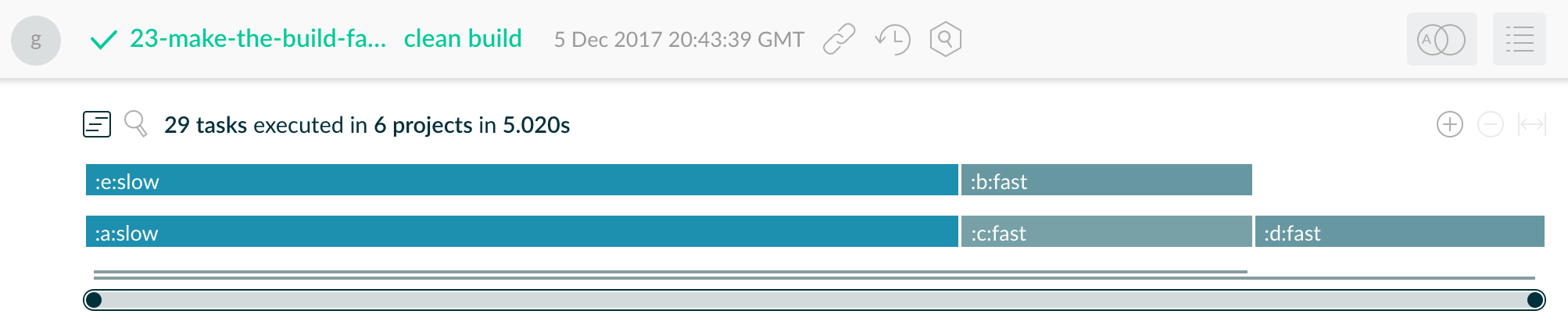

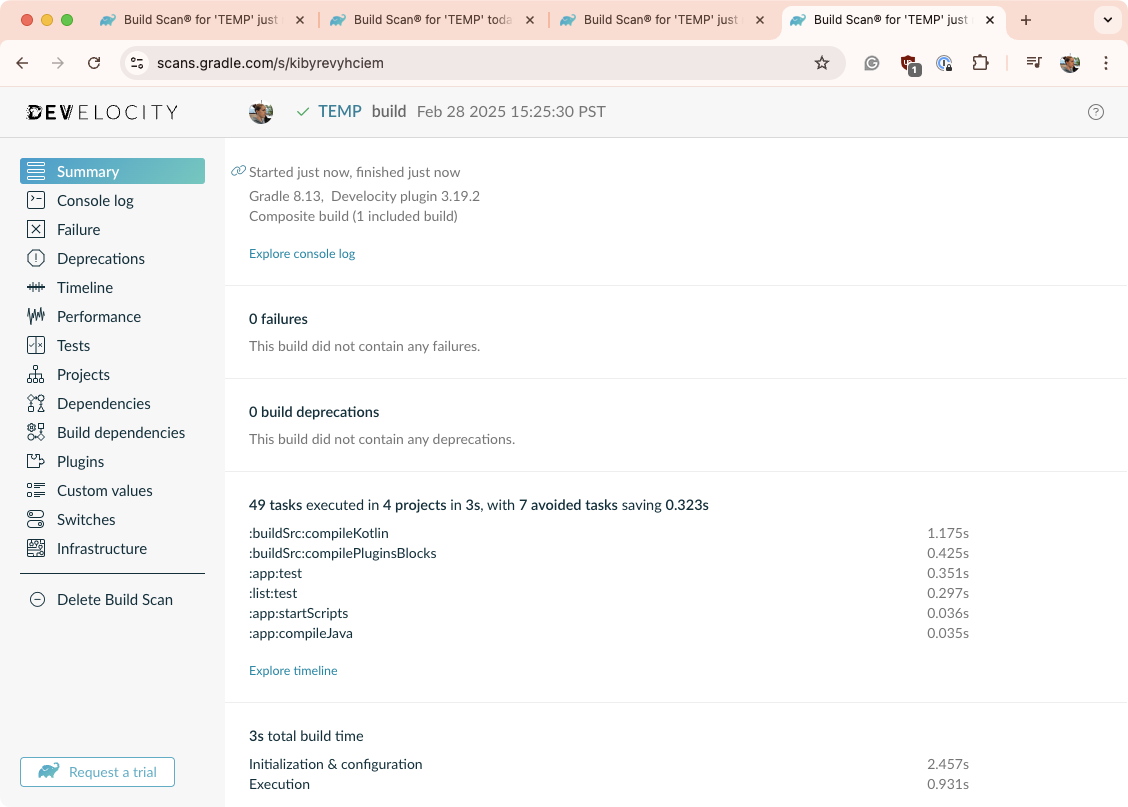

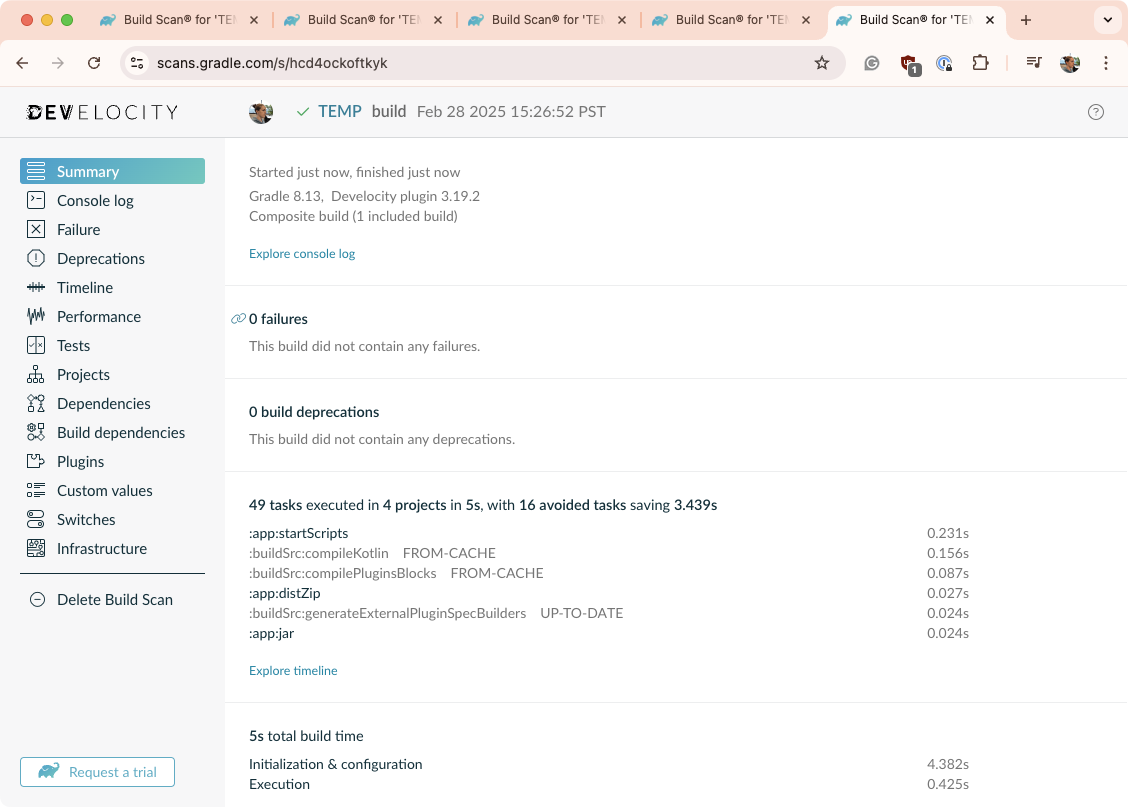

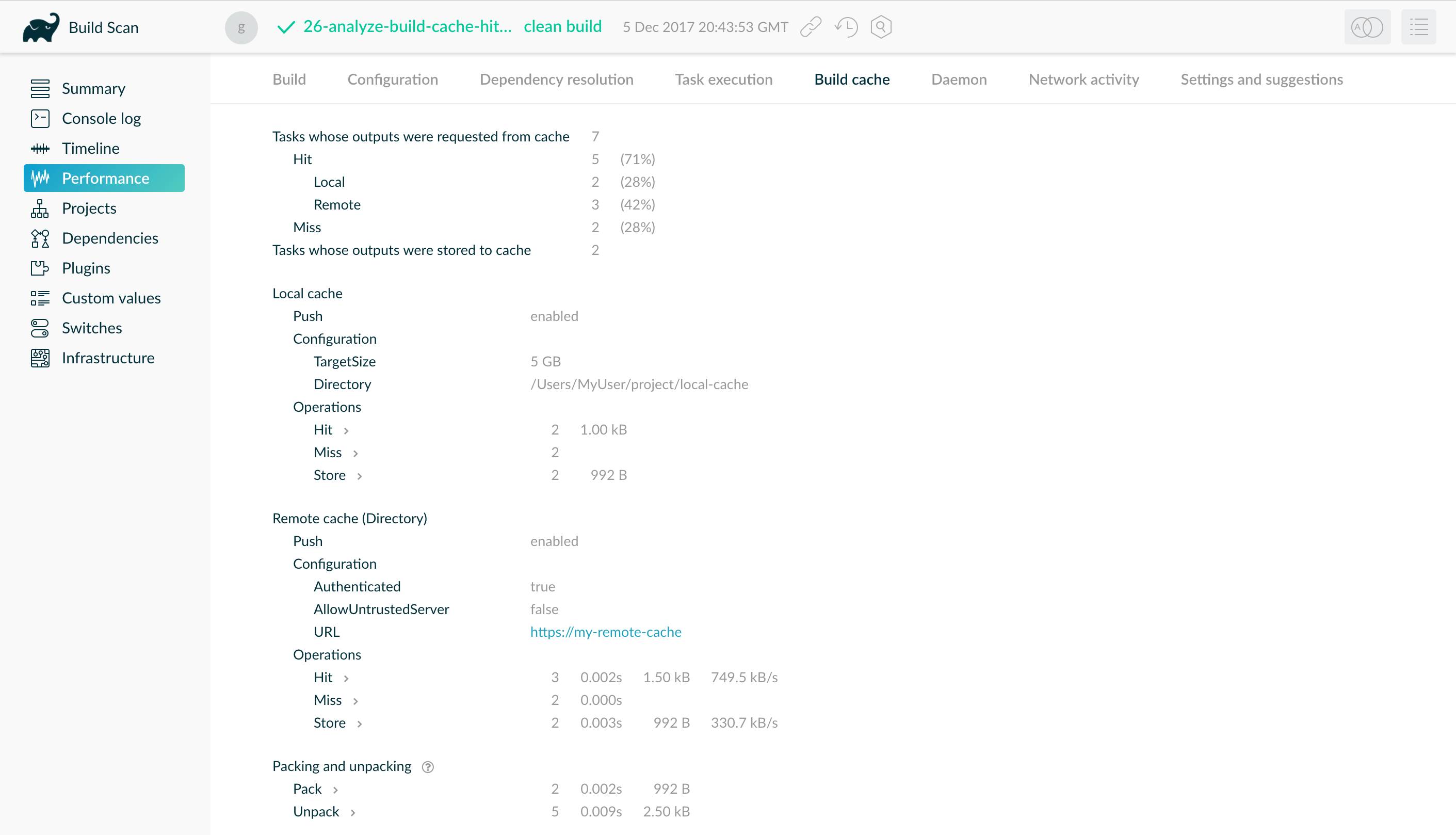

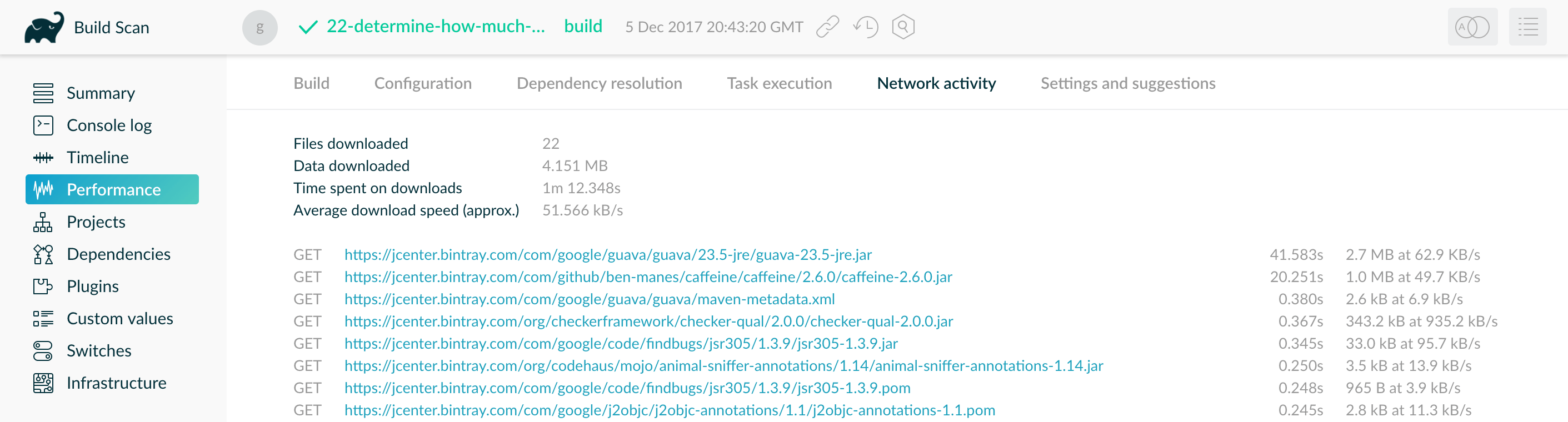

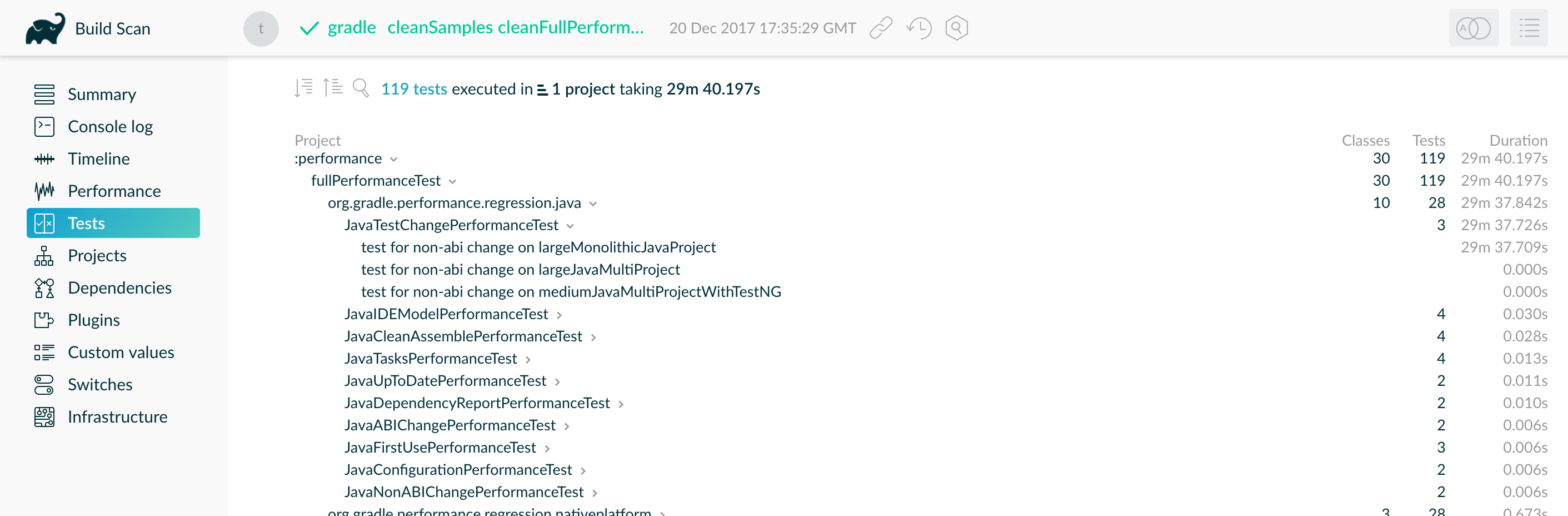

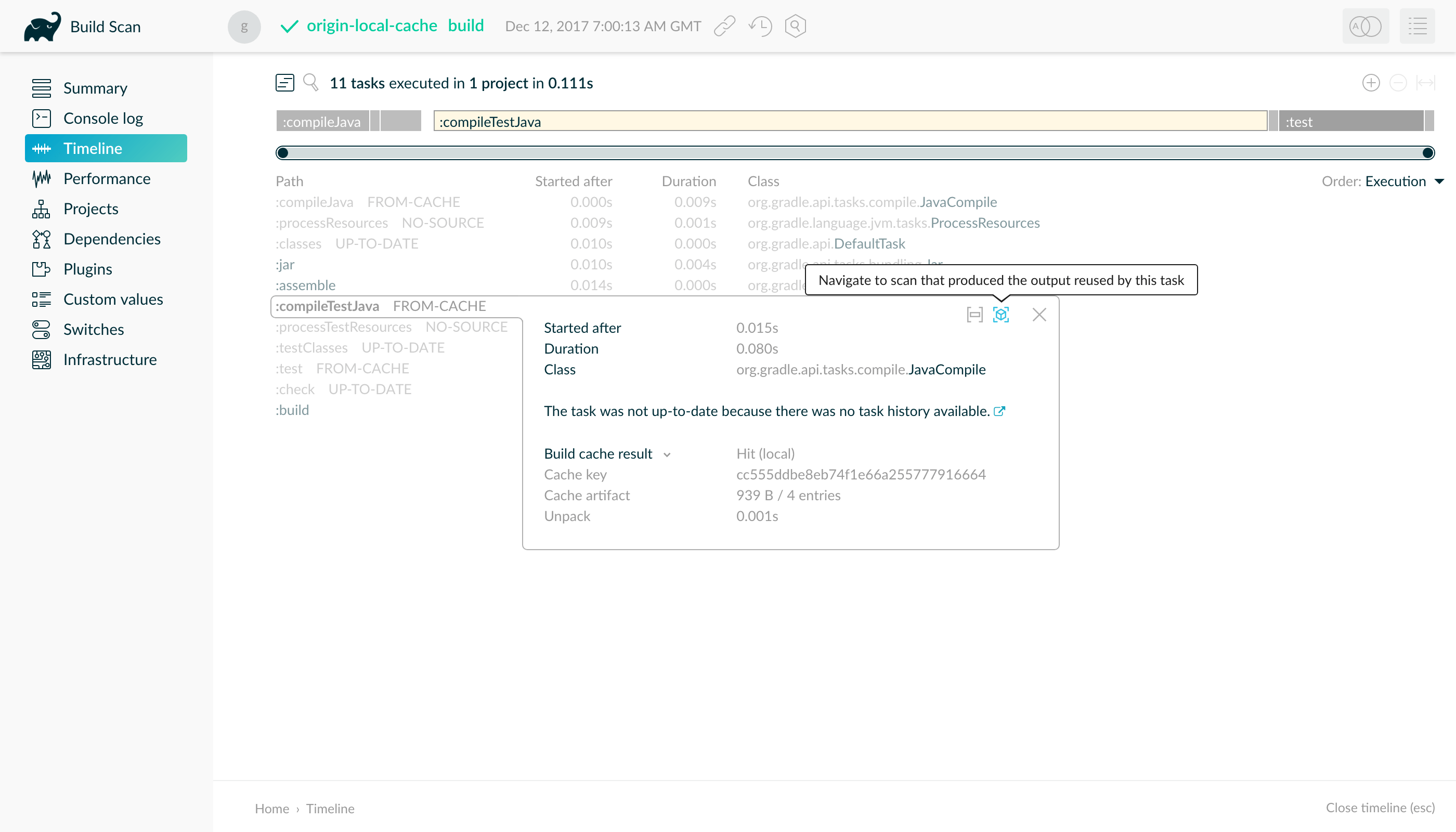

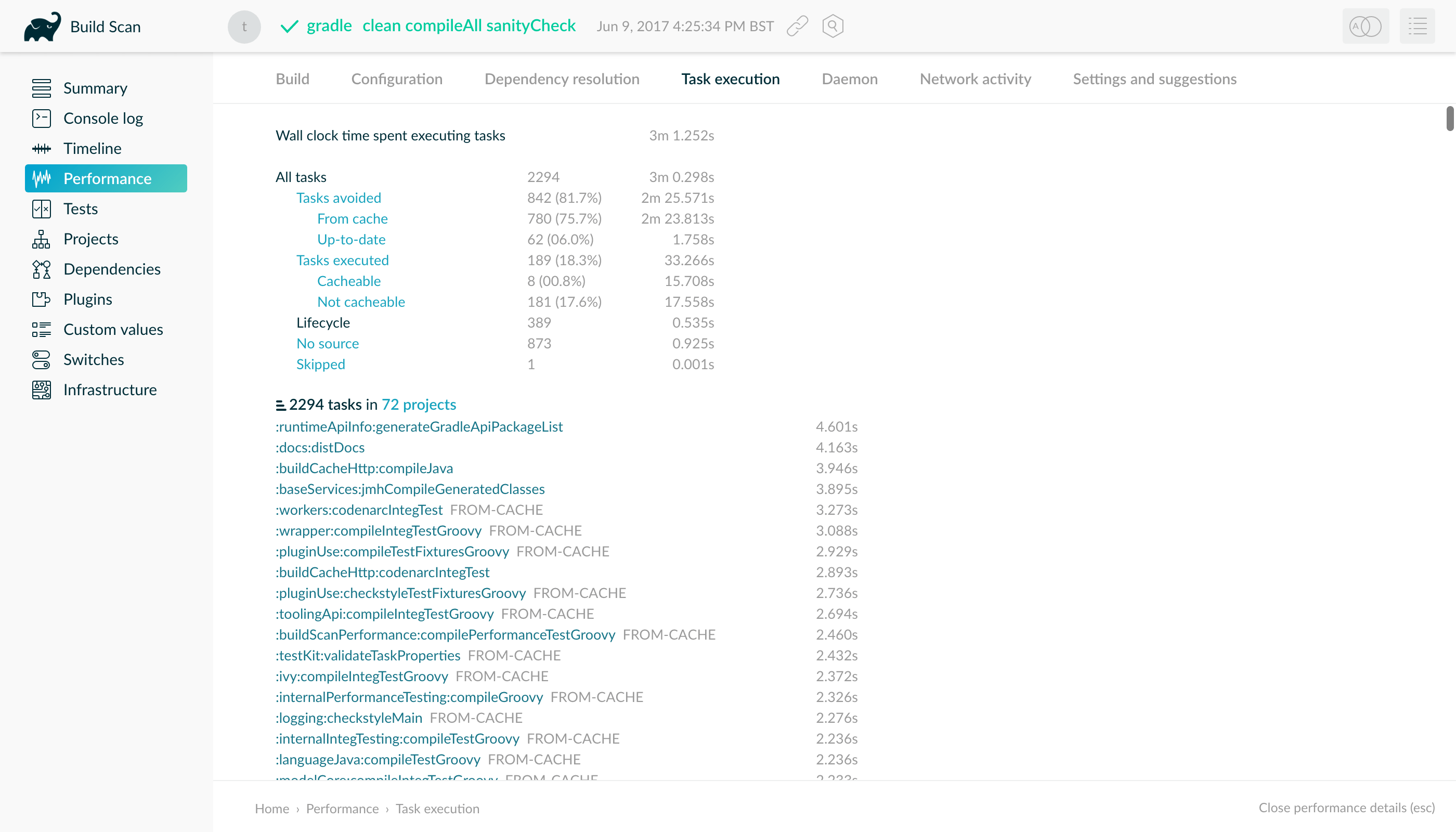

- Build cache performance

- Important concepts

- Caching Java projects

- Caching Android projects

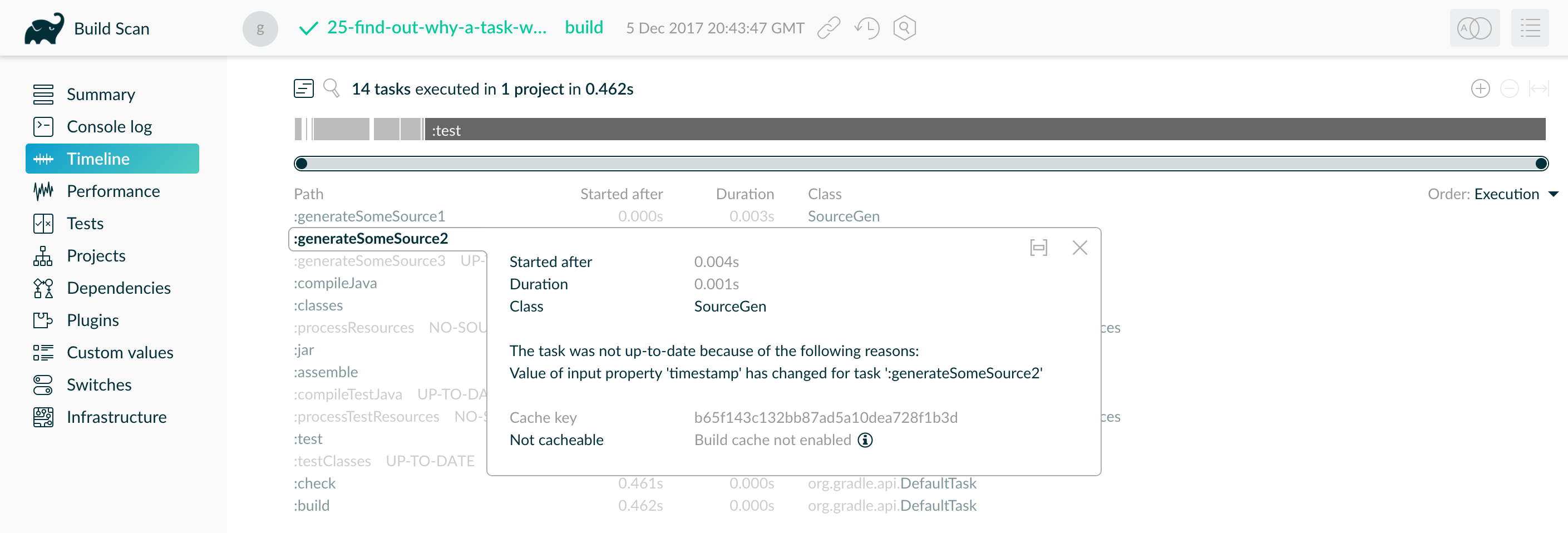

- Debugging and diagnosing Build Cache misses

- Solving common problems

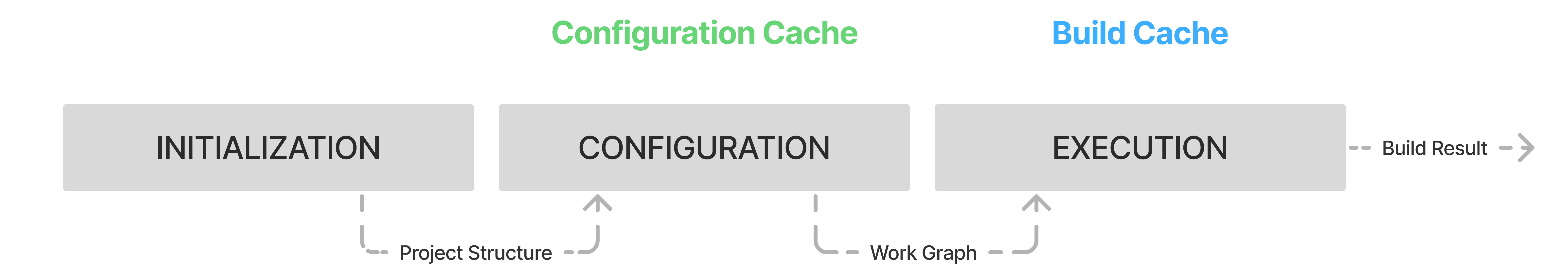

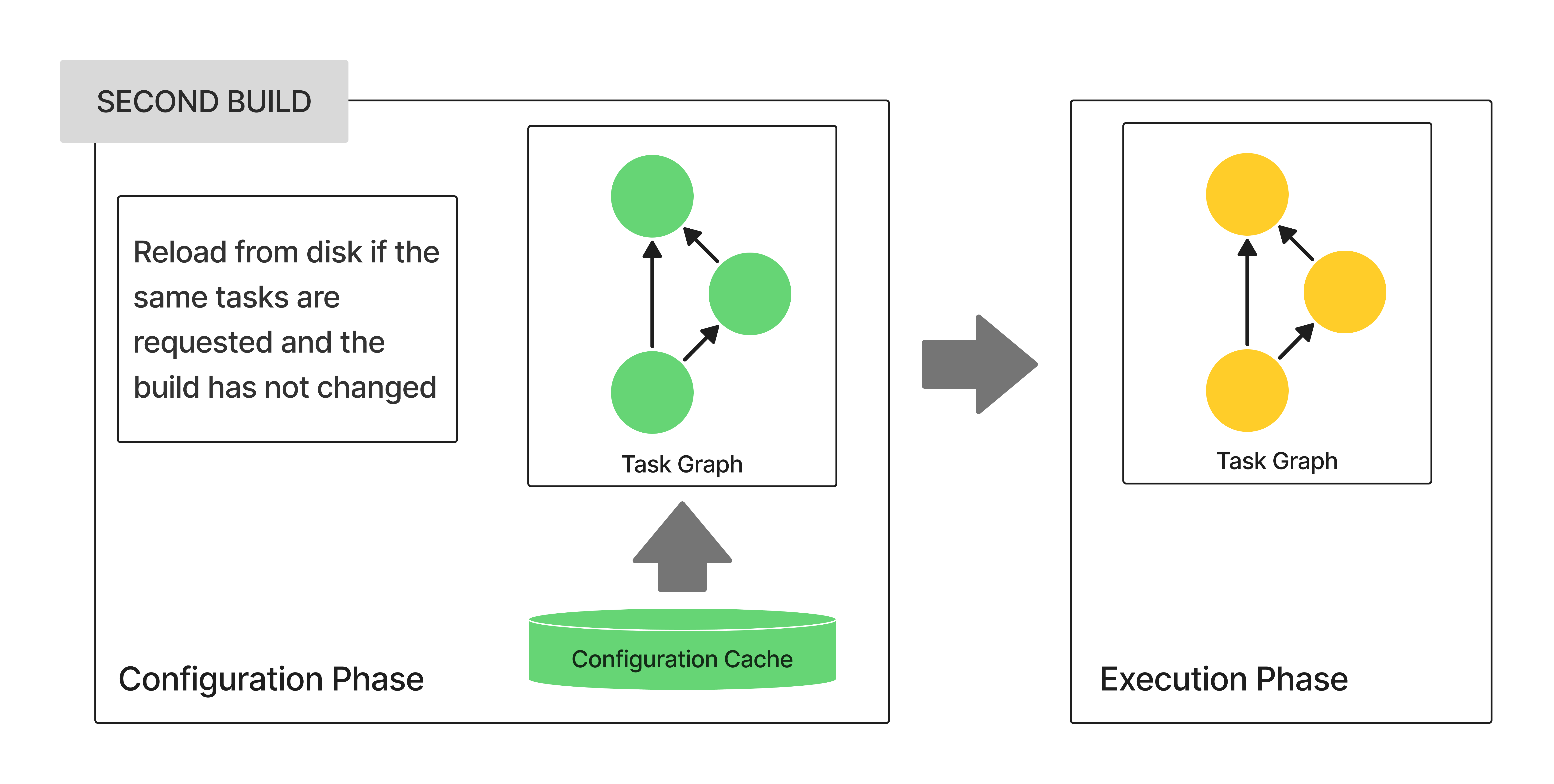

- Configuration Cache

- Enabling and Configuring the Configuration Cache

- Configuration Cache Requirements for your Build Logic

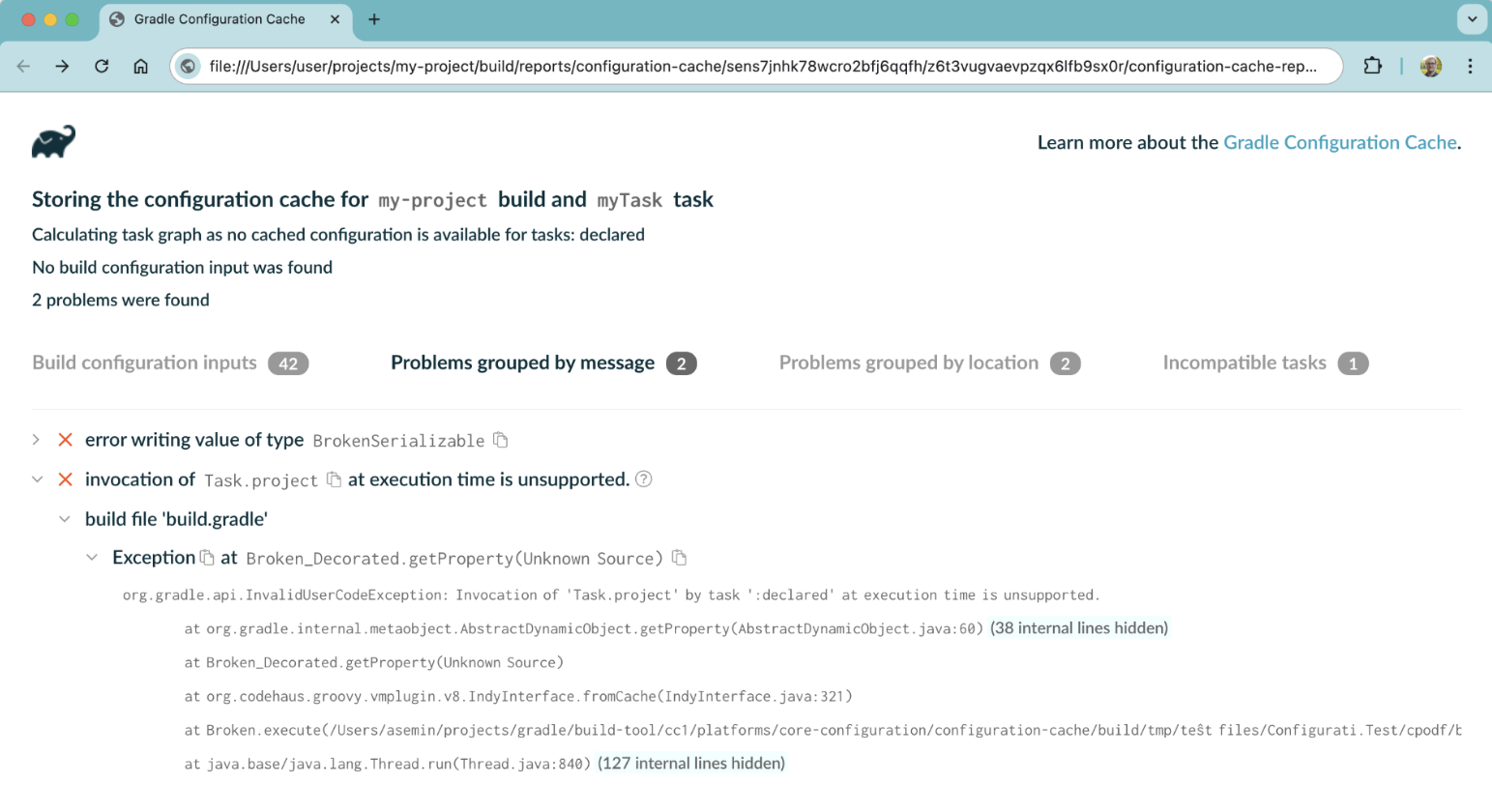

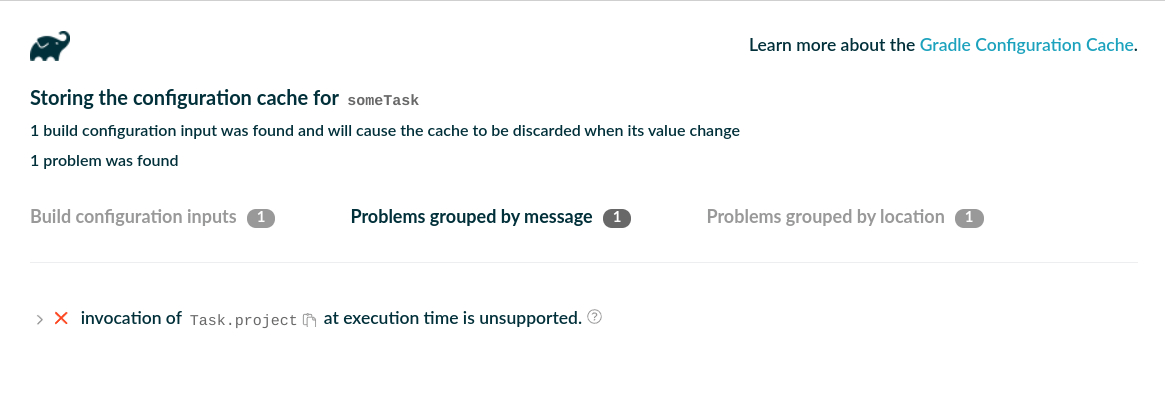

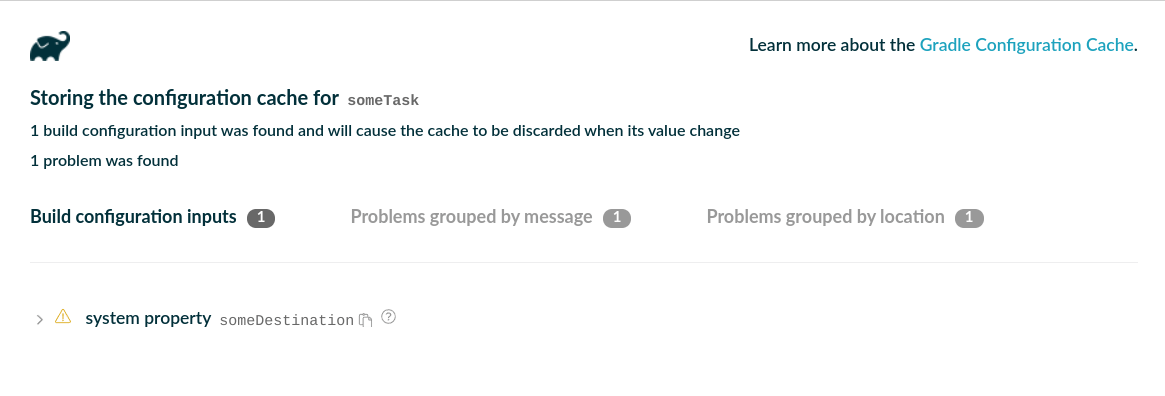

- Debugging and Troubleshooting the Configuration Cache

- Configuration Cache Status

- INTEGRATION

- LICENSE INFORMATION

RELEASES

Installing Gradle

If all you want to do is run an existing Gradle project, then you don’t need to install Gradle if the build uses the Gradle Wrapper.

Gradle Installation

The Gradle Wrapper is identifiable by the presence of the gradlew or gradlew.bat files in the root of the project:

    .                   // (1)
    ├── gradle
    │   └── wrapper     // (2)
    ├── gradlew         // (3)
    ├── gradlew.bat     // (3)
    └── ⋮

- (1) Project root directory.
- (2) Gradle Wrapper directory.
- (3) Scripts for executing Gradle builds.

If the gradlew or gradlew.bat files are already present in your project, you do not need to install Gradle.

But you need to make sure your system satisfies Gradle’s prerequisites.

You can follow the steps in the Upgrading Gradle section if you want to update the Gradle version for your project. Please use the Gradle Wrapper to upgrade Gradle.

Android Studio comes with a working installation of Gradle, so you don’t need to install Gradle separately when only working within that IDE.

If you do not meet the criteria above and decide to install Gradle on your machine, first check if Gradle is already installed by running gradle -v in your terminal.

If the command is not found, Gradle is not installed, and you can follow the instructions below.
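The check for an existing installation can be scripted. The following is a minimal sketch, assuming a POSIX-compatible shell; the helper name gradle_status is illustrative and not part of Gradle:

```shell
# Report whether a `gradle` executable can be found on the PATH.
# `gradle_status` is a hypothetical helper name, not a Gradle command.
gradle_status() {
  if command -v gradle >/dev/null 2>&1; then
    printf 'installed\n'
  else
    printf 'missing\n'
  fi
}

# Print the version only when Gradle is actually available.
if [ "$(gradle_status)" = "installed" ]; then
  gradle -v
else
  echo "Gradle is not on the PATH; continue with the installation steps."
fi
```

A project that ships the Gradle Wrapper does not need this check, since gradlew downloads the required Gradle version itself.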

You can install Gradle Build Tool on Linux, macOS, or Windows. The installation can be done manually or using a package manager like SDKMAN! or Homebrew.

You can find all Gradle releases and their checksums on the releases page.

Prerequisites

Gradle runs on all major operating systems. It requires Java Development Kit (JDK) version 17 or higher to run. You can check the compatibility matrix for more information.

To check, run java -version:

    ❯ java -version
    openjdk version "17.0.6" 2023-01-17
    OpenJDK Runtime Environment Temurin-17.0.6+10 (build 17.0.6+10)
    OpenJDK 64-Bit Server VM Temurin-17.0.6+10 (build 17.0.6+10, mixed mode)

Gradle uses the JDK it finds in your path, the JDK used by your IDE, or the JDK specified in your project.

In this example, the $PATH points to JDK17:

    ❯ echo $PATH
    /opt/homebrew/opt/openjdk@17/bin

You can also set the JAVA_HOME environment variable to point to a specific JDK installation directory.

This is especially useful when multiple JDKs are installed:

    ❯ echo %JAVA_HOME%
    C:\Program Files\Java\jdk17.0_6

    ❯ echo $JAVA_HOME
    /Library/Java/JavaVirtualMachines/jdk-17.jdk/Contents/Home
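The JDK prerequisite can also be checked from a script. The sketch below assumes a POSIX-compatible shell and that `java -version` prints a line containing `version "<number>"`, as in the sample output above. The helper names java_major, detected_java_version, and meets_gradle_minimum are illustrative:

```shell
# Print the major Java version for a version string such as 17.0.6 or 1.8.0_362.
java_major() (
  v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # legacy scheme: 1.8.0_362 -> 8.0_362
  esac
  printf '%s\n' "${v%%[!0-9]*}"   # keep only the leading digits
)

# Read the version string that `java -version` reports (it prints to stderr).
detected_java_version() {
  java -version 2>&1 | sed -n '1s/.*version "\([^"]*\)".*/\1/p'
}

# Succeed when the given major version meets the minimum of 17 stated above.
meets_gradle_minimum() {
  [ -n "$1" ] && [ "$1" -ge 17 ]
}

if command -v java >/dev/null 2>&1; then
  major="$(java_major "$(detected_java_version)")"
  if meets_gradle_minimum "$major"; then
    echo "Java $major can run Gradle."
  else
    echo "Java ${major:-unknown} is too old to run Gradle; JDK 17 or higher is required."
  fi
else
  echo "No java executable found on the PATH."
fi
```

The check only covers the lower bound; the compatibility matrix lists the highest Java version each Gradle release supports.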

Linux installation

Installing with a package manager

SDKMAN! is a tool for managing parallel versions of multiple Software Development Kits on most Unix-like systems (macOS, Linux, Cygwin, Solaris and FreeBSD). Gradle is deployed and maintained by SDKMAN!:

❯ sdk install gradle

Other package managers are available, but the version of Gradle distributed by them is not controlled by Gradle, Inc. Linux package managers may distribute a modified version of Gradle that is incompatible or incomplete when compared to the official version.

Installing manually

Step 1 - Download the latest Gradle distribution

The distribution ZIP file comes in two flavors:

- Binary-only (bin)
- Complete (all) with docs and sources

We recommend downloading the bin file; it is a smaller file that is quick to download (and the latest documentation is available online).

Step 2 - Unpack the distribution

Unzip the distribution zip file in the directory of your choosing, e.g.:

    ❯ mkdir /opt/gradle
    ❯ unzip -d /opt/gradle gradle-9.3.1-bin.zip
    ❯ ls /opt/gradle/gradle-9.3.1
    LICENSE  NOTICE  bin  README  init.d  lib  media

Step 3 - Configure your system environment

To install Gradle, the path to the unpacked files needs to be in your PATH.

Configure your PATH environment variable to include the bin directory of the unzipped distribution, e.g.:

❯ export PATH=$PATH:/opt/gradle/gradle-9.3.1/bin

Alternatively, you could also add the environment variable GRADLE_HOME and point this to the unzipped distribution.

Instead of adding a specific version of Gradle to your PATH, you can add $GRADLE_HOME/bin to your PATH.

When upgrading to a different version of Gradle, simply change the GRADLE_HOME environment variable.

export GRADLE_HOME=/opt/gradle/gradle-9.3.1

export PATH=${GRADLE_HOME}/bin:${PATH}

macOS installation

Installing with a package manager

SDKMAN! is a tool for managing parallel versions of multiple Software Development Kits on most Unix-like systems (macOS, Linux, Cygwin, Solaris and FreeBSD). Gradle is deployed and maintained by SDKMAN!:

❯ sdk install gradle

Using Homebrew:

❯ brew install gradle

Using MacPorts:

❯ sudo port install gradle

Other package managers are available, but the version of Gradle distributed by them is not controlled by Gradle, Inc.

Installing manually

Step 1 - Download the latest Gradle distribution

The distribution ZIP file comes in two flavors:

- Binary-only (bin)
- Complete (all) with docs and sources

We recommend downloading the bin file; it is a smaller file that is quick to download (and the latest documentation is available online).

Step 2 - Unpack the distribution

Unzip the distribution zip file in the directory of your choosing, e.g.:

    ❯ mkdir /usr/local/gradle
    ❯ unzip gradle-9.3.1-bin.zip -d /usr/local/gradle
    ❯ ls /usr/local/gradle/gradle-9.3.1
    LICENSE  NOTICE  README  bin  init.d  lib

Step 3 - Configure your system environment

To install Gradle, the path to the unpacked files needs to be in your PATH.

Configure your PATH environment variable to include the bin directory of the unzipped distribution, e.g.:

❯ export PATH=$PATH:/usr/local/gradle/gradle-9.3.1/bin

Alternatively, you could also add the environment variable GRADLE_HOME and point this to the unzipped distribution.

Instead of adding a specific version of Gradle to your PATH, you can add $GRADLE_HOME/bin to your PATH.

When upgrading to a different version of Gradle, simply change the GRADLE_HOME environment variable.

It’s a good idea to edit .bash_profile in your home directory to add the GRADLE_HOME variable:

export GRADLE_HOME=/usr/local/gradle/gradle-9.3.1

export PATH=$GRADLE_HOME/bin:$PATH

Windows installation

Installing manually

Step 1 - Download the latest Gradle distribution

The distribution ZIP file comes in two flavors:

- Binary-only (bin)
- Complete (all) with docs and sources

We recommend downloading the bin file.

Step 2 - Unpack the distribution

Create a new directory C:\Gradle with File Explorer.

Open a second File Explorer window and go to the directory where the Gradle distribution was downloaded. Double-click the ZIP archive to expose the content.

Drag the content folder gradle-9.3.1 to your newly created C:\Gradle folder.

Alternatively, you can unpack the Gradle distribution ZIP into C:\Gradle using the archiver tool of your choice.

Step 3 - Configure your system environment

To install Gradle, the path to the unpacked files needs to be in your Path.

In File Explorer, right-click on the This PC (or Computer) icon, then click Properties → Advanced System Settings → Environment Variables.

Under System Variables select Path, then click Edit.

Add an entry for C:\Gradle\gradle-9.3.1\bin.

Click OK to save.

Alternatively, you can add the environment variable GRADLE_HOME and point this to the unzipped distribution.

Instead of adding a specific version of Gradle to your Path, you can add %GRADLE_HOME%\bin to your Path.

When upgrading to a different version of Gradle, just change the GRADLE_HOME environment variable.

Verify the installation

Open a console (or a Windows command prompt) and run gradle -v to run gradle and display the version, e.g.:

    ❯ gradle -v
    ------------------------------------------------------------
    Gradle 9.0.0
    ------------------------------------------------------------
    Build time:    2025-07-17 12:48:00 UTC
    Revision:      2db9560bb68c367a265b10516c856c840f9bed8d
    Kotlin:        2.2.0
    Groovy:        4.0.28
    Ant:           Apache Ant(TM) version 1.10.15 compiled on August 25 2024
    Launcher JVM:  17.0.11 (Amazon.com Inc. 17.0.11+9-LTS)
    Daemon JVM:    Compatible with Java 17, any vendor, nativeImageCapable=false (from gradle/gradle-daemon-jvm.properties)
    OS:            Mac OS X 14.7.4 aarch64

You can verify the integrity of the Gradle distribution by downloading the SHA-256 file (available from the releases page) and following these verification instructions.
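The integrity check can be sketched in a few lines of shell. This assumes a POSIX-compatible shell with either sha256sum or shasum installed. It also assumes the downloaded checksum file contains only the hexadecimal digest. The helper names and the checksum file name in the usage comment are illustrative:

```shell
# Print the SHA-256 digest of a file using whichever common tool is available.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | cut -d ' ' -f 1
  else
    shasum -a 256 "$1" | cut -d ' ' -f 1
  fi
}

# Succeed only when the file's digest equals the expected value.
verify_sha256() {
  [ "$(sha256_of "$1")" = "$2" ]
}

# Usage (file names are assumptions):
# verify_sha256 gradle-9.3.1-bin.zip "$(cat gradle-9.3.1-bin.zip.sha256)" && echo "checksum OK"
```

A mismatch means the download is corrupt or has been tampered with, and the archive should not be unpacked.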

Compatibility Matrix

The sections below describe Gradle’s compatibility with several integrations. Versions not listed here may or may not work.

Java Runtime

Gradle runs on the Java Virtual Machine (JVM), which is often provided by either a JDK or JRE. A JVM version between 17 and 25 is required to execute Gradle. JVM 26 and later versions are not yet supported.

The Gradle wrapper, Gradle client, Tooling API client, and TestKit client are compatible with JVM 8.

JDK 6 and above can be used for compilation. JVM 8 and above can be used for executing tests.

Any fully supported version of Java can be used for compilation or testing. However, the latest Java version may only be supported for compilation or testing, not for running Gradle. Support is achieved using toolchains and applies to all tasks supporting toolchains.

See the table below for the Java version supported by a specific Gradle release:

| Java version | Support for toolchains | Support for running Gradle |
|---|---|---|
| 8 | N/A | 2.0 to 8.14.x |
| 9 | N/A | 4.3 to 8.14.x |
| 10 | N/A | 4.7 to 8.14.x |
| 11 | N/A | 5.0 to 8.14.x |
| 12 | N/A | 5.4 to 8.14.x |
| 13 | N/A | 6.0 to 8.14.x |
| 14 | N/A | 6.3 to 8.14.x |
| 15 | 6.7 | 6.7 to 8.14.x |
| 16 | 7.0 | 7.0 to 8.14.x |
| 17 | 7.3 | 7.3 and after |
| 18 | 7.5 | 7.5 and after |
| 19 | 7.6 | 7.6 and after |
| 20 | 8.1 | 8.3 and after |
| 21 | 8.4 | 8.5 and after |
| 22 | 8.7 | 8.8 and after |
| 23 | 8.10 | 8.10 and after |
| 24 | 8.14 | 8.14 and after |
| 25 | 9.1.0 | 9.1.0 and after |
| 26 | N/A | N/A |

Note: We only list versions in the table above once we have tested that they work without any warnings. However, thanks to the toolchain support, Gradle will often work with the latest Java version before then. We encourage users to try it out and let us know.

Kotlin

Gradle is tested with Kotlin 2.0.0 through 2.3.0-RC. Beta and RC versions may or may not work.

| Embedded Kotlin version | Minimum Gradle version | Kotlin Language version |
|---|---|---|
| 1.3.10 | 5.0 | 1.3 |
| 1.3.11 | 5.1 | 1.3 |
| 1.3.20 | 5.2 | 1.3 |
| 1.3.21 | 5.3 | 1.3 |
| 1.3.31 | 5.5 | 1.3 |
| 1.3.41 | 5.6 | 1.3 |
| 1.3.50 | 6.0 | 1.3 |
| 1.3.61 | 6.1 | 1.3 |
| 1.3.70 | 6.3 | 1.3 |
| 1.3.71 | 6.4 | 1.3 |
| 1.3.72 | 6.5 | 1.3 |
| 1.4.20 | 6.8 | 1.3 |
| 1.4.31 | 7.0 | 1.4 |
| 1.5.21 | 7.2 | 1.4 |
| 1.5.31 | 7.3 | 1.4 |
| 1.6.21 | 7.5 | 1.4 |
| 1.7.10 | 7.6 | 1.4 |
| 1.8.10 | 8.0 | 1.8 |
| 1.8.20 | 8.2 | 1.8 |
| 1.9.0 | 8.3 | 1.8 |
| 1.9.10 | 8.4 | 1.8 |
| 1.9.20 | 8.5 | 1.8 |
| 1.9.22 | 8.7 | 1.8 |
| 1.9.23 | 8.9 | 1.8 |
| 1.9.24 | 8.10 | 1.8 |
| 2.0.20 | 8.11 | 1.8 |
| 2.0.21 | 8.12 | 1.8 |
| 2.2.0 | 9.0.0 | 2.2 |
| 2.2.20 | 9.2.0 | 2.2 |
| 2.2.21 | 9.3.0 | 2.2 |

Groovy

Gradle is tested with Groovy 1.5.8 through 5.0.2.

Gradle plugins written in Groovy must use Groovy 4.x for compatibility with Gradle and Groovy DSL build scripts.

Android

Gradle is tested with Android Gradle Plugin 8.13 through 9.0.0-beta02. Alpha and beta versions may or may not work.

Target Platforms

Gradle supports a defined set of platform targets, which are combinations of:

- Operating system and version
- Architecture
- File system watching compatibility

The following table lists the officially supported platforms for Gradle:

| OS | Architecture |
|---|---|

Note: Currently, all Gradle tests run with the default file-systems of the platform, i.e. ext4 for Ubuntu, Amazon Linux and CentOS, NTFS for Windows, and APFS for macOS.

Platforms not listed above may work with Gradle but are not actively tested.

The Feature Lifecycle

Gradle is under constant development. New versions are delivered on a regular and frequent basis (approximately every six weeks) as described in the section on end-of-life support.

Continuous improvement combined with frequent delivery allows new features to be available to users early. Early users provide invaluable feedback, which is incorporated into the development process.

Getting new functionality into the hands of users regularly is a core value of the Gradle platform.

At the same time, API and feature stability are taken very seriously and considered a core value of the Gradle platform. Design choices and automated testing are engineered into the development process and formalized by the section on backward compatibility.

The Gradle feature lifecycle has been designed to meet these goals. It also communicates to users of Gradle what the state of a feature is. The term feature typically means an API or DSL method or property in this context, but it is not restricted to this definition. Command line arguments and modes of execution (e.g. the Build Daemon) are two examples of other features.

1. Internal

Internal features are not designed for public use and are only intended to be used by Gradle itself. They can change in any way at any point in time without any notice. Therefore, we recommend avoiding the use of such features. Internal features are not documented. If it appears in this User Manual, the DSL Reference, or the API Reference, then the feature is not internal.

Internal features may evolve into public features.

2. Incubating

Features are introduced in the incubating state to allow real-world feedback to be incorporated into the feature before making it public. It also gives users willing to test potential future changes early access.

A feature in an incubating state may change in future Gradle versions until it is no longer incubating. Changes to incubating features for a Gradle release will be highlighted in the release notes for that release. The incubation period for new features varies depending on the feature’s scope, complexity, and nature.

Incubating features are marked as such. In the source code, all incubating methods, properties, and classes are annotated with @Incubating, which gives them a special mark in the DSL and API references.

If an incubating feature is discussed in this User Manual, it will be explicitly said to be in the incubating state.

Feature Preview API

The feature preview API allows certain incubating features to be activated by adding enableFeaturePreview('FEATURE') in your settings file.

Individual preview features will be announced in release notes.

When incubating features are either promoted to public or removed, the feature preview flags for them become obsolete, have no effect, and should be removed from the settings file.

3. Public

The default state for a non-internal feature is public. Anything documented in the User Manual, DSL Reference, or API reference that is not explicitly said to be incubating or deprecated is considered public. Features are said to be promoted from an incubating state to public. The release notes for each release indicate which previously incubating features are being promoted by the release.

A public feature will never be removed or intentionally changed without undergoing deprecation. All public features are subject to the backward compatibility policy.

4. Deprecated

Some features may be replaced or become irrelevant due to the natural evolution of Gradle. Such features will eventually be removed from Gradle after being deprecated. A deprecated feature may become stale until it is finally removed according to the backward compatibility policy.

Deprecated features are marked as such. In the source code, all deprecated methods, properties, and classes are annotated with @java.lang.Deprecated, which is reflected in the DSL and API References. In most cases, there is a replacement for the deprecated element, which will be described in the documentation. Using a deprecated feature will result in a runtime warning in Gradle’s output.

The use of deprecated features should be avoided. The release notes for each release indicate any features being deprecated by the release.

Backward compatibility Policy

Gradle provides backward compatibility across major versions (e.g., 1.x, 2.x, etc.).

Once a public feature is introduced in a Gradle release, it will remain indefinitely unless deprecated.

Once deprecated, it may be removed in the next major release.

Deprecated features may be supported across major releases, but this is not guaranteed.

Release end-of-life Policy

Every day, a new nightly build of Gradle is created.

This contains all of the changes made through Gradle’s extensive continuous integration tests during that day. Nightly builds may contain new changes that may or may not be stable.

The Gradle team creates a pre-release distribution called a release candidate (RC) for each minor or major release. When no problems are found after a short time (usually a week), the release candidate is promoted to a general availability (GA) release. If a regression is found in the release candidate, a new RC distribution is created, and the process repeats. Release candidates are supported for as long as the release window is open, but they are not intended to be used for production. Bug reports are greatly appreciated during the RC phase.

The Gradle team may create additional patch releases to replace the final release due to critical bug fixes or regressions. For instance, Gradle 5.2.1 replaces the Gradle 5.2 release.

Once a release candidate has been made, all feature development moves on to the next release for the latest major version. As such, each minor Gradle release causes the previous minor releases in the same major version to become end-of-life (EOL). EOL releases do not receive bug fixes or feature backports.

For major versions, Gradle will backport critical fixes and security fixes to the last minor in the previous major version. For example, when Gradle 7 was the latest major version, several releases were made in the 6.x line, including Gradle 6.9 (and subsequent releases).

As such, each major Gradle release causes:

- The previous major version to become maintenance only. It will only receive critical bug fixes and security fixes.
- The major version before the previous one to become end-of-life (EOL). That release line will not receive any new fixes.
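The effect of a major release on older release lines can be expressed as a small lookup. The function below is an illustrative sketch of the policy as described here, not a Gradle tool. It looks only at major versions and ignores the rule that, within a major line, each new minor release makes earlier minors end-of-life:

```shell
# Classify a major version relative to the latest major release.
# Usage: support_status LATEST_MAJOR QUERIED_MAJOR
# `support_status` is a hypothetical helper name.
support_status() (
  latest="$1"
  queried="$2"
  if [ "$queried" -ge "$latest" ]; then
    echo "current"
  elif [ "$queried" -eq $((latest - 1)) ]; then
    echo "maintenance"
  else
    echo "end-of-life"
  fi
)

support_status 9 9   # prints "current"
support_status 9 8   # prints "maintenance"
support_status 9 7   # prints "end-of-life"
```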

RUNTIME AND CONFIGURATION

Command-Line Interface

The command-line interface is the primary method of interacting with Gradle.

The following is a reference for executing and customizing the Gradle command-line. It also serves as a reference when writing scripts or configuring continuous integration.

Use of the Gradle Wrapper is highly encouraged.

Substitute ./gradlew (in macOS / Linux) or gradlew.bat (in Windows) for gradle in the following examples.

Executing Gradle on the command-line conforms to the following structure:

gradle [taskName...] [--option-name...]

Options are allowed before and after task names.

gradle [--option-name...] [taskName...]

If multiple tasks are specified, you should separate them with a space.

gradle [taskName1 taskName2...] [--option-name...]

Options that accept values can be specified with or without = between the option and argument. The use of = is recommended.

gradle [...] --console=plain

Options that enable behavior have long-form options with inverses specified with --no-. The following are opposites.

gradle [...] --build-cache

gradle [...] --no-build-cache

Many long-form options have short-option equivalents. The following are equivalent:

gradle --help

gradle -h

Note: Many command-line flags can be specified in gradle.properties to avoid needing to be typed. See the Configuring build environment guide for details.
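As an illustration of moving flags into gradle.properties, the sketch below writes a properties file that mirrors the --build-cache and --console=plain options used in the examples above. The PROJECT_DIR variable is a hypothetical override; without it, the file is written to a temporary directory so that no existing gradle.properties is overwritten:

```shell
# Write a gradle.properties file into a scratch project directory.
# PROJECT_DIR is a hypothetical variable; it defaults to a fresh temporary directory.
project_dir="${PROJECT_DIR:-$(mktemp -d)}"

cat > "$project_dir/gradle.properties" <<'EOF'
# Same effect as passing --build-cache on every invocation
org.gradle.caching=true
# Same effect as passing --console=plain on every invocation
org.gradle.console=plain
EOF

cat "$project_dir/gradle.properties"
```

An option given on the command line still overrides the value from the file, so --no-build-cache would disable the cache for a single invocation.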

Command-line usage

The following sections describe the use of the Gradle command-line interface.

Some plugins also add their own command line options.

For example, --tests, which is added by Java test filtering.

For more information on exposing command line options for your own tasks, see Declaring command-line options.

Executing tasks

You can learn about what projects and tasks are available in the project reporting section.

Most builds support a common set of tasks known as lifecycle tasks. These include the build, assemble, and check tasks.

To execute a task called myTask on the root project, type:

$ gradle :myTask

This will run the single myTask and all of its dependencies.

Specify options for tasks

To pass an option to a task, prefix the option name with -- after the task name:

$ gradle :exampleTask --exampleOption=exampleValue

Disambiguate task options from built-in options

Gradle does not prevent tasks from registering options that conflict with Gradle’s built-in options, like --profile or --help.

You can disambiguate conflicting task options from Gradle’s built-in options by placing a -- delimiter before the task name in the command:

$ gradle [--built-in-option-name...] -- [taskName...] [--task-option-name...]

Consider a task named mytask that accepts an option named profile:

- In gradle mytask --profile, Gradle accepts --profile as the built-in Gradle option.
- In gradle -- mytask --profile=value, Gradle passes --profile as a task option.

Executing tasks in multi-project builds

In a multi-project build, subproject tasks can be executed with : separating the subproject name and task name.

The following are equivalent when run from the root project:

$ gradle :subproject:taskName

$ gradle subproject:taskName

You can also run a task for all subprojects using a task selector that consists of only the task name.

The following command runs the test task for all subprojects when invoked from the root project directory:

$ gradle test

To recap:

// Run a task in the root project only

$ gradle :exampleTask --exampleOption=exampleValue

// Run a task that may exist in the root or any subproject (ambiguous if defined in more than one)

$ gradle exampleTask --exampleOption=exampleValue

// Run a task in a specific subproject

$ gradle subproject:exampleTask --exampleOption=exampleValue

$ gradle :subproject:exampleTask --exampleOption=exampleValue

Note: Some task selectors, like help or dependencies, will only run the task on the project they are invoked on and not on all the subprojects.

When invoking Gradle from within a subproject, the project name should be omitted:

$ cd subproject

$ gradle taskName

Tip: When executing the Gradle Wrapper from a subproject directory, reference gradlew relatively. For example: ../gradlew taskName.
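Typing the relative path to gradlew by hand becomes tedious in deeply nested subprojects. The helper below is a sketch, assuming a POSIX-compatible shell, that searches upward from a directory until it finds an executable gradlew. The name find_gradlew is illustrative and not part of Gradle:

```shell
# Print the path of the nearest gradlew script, searching the given directory
# (default: the current one) and then each parent directory in turn.
# The body runs in a subshell, so `exit` only leaves the function.
find_gradlew() (
  dir="$(cd "${1:-.}" && pwd)" || exit 1
  while :; do
    if [ -x "$dir/gradlew" ]; then
      printf '%s\n' "$dir/gradlew"
      exit 0
    fi
    # Stop once the file system root has been checked.
    [ "$dir" = "/" ] && exit 1
    dir="$(dirname "$dir")"
  done
)

# Usage from any subproject directory (not executed here):
# "$(find_gradlew)" taskName
```

The function returns a non-zero status when no wrapper is found, so a calling script can fall back to an installed gradle.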

Executing multiple tasks

You can also specify multiple tasks. The tasks' dependencies determine the precise order of execution, and a task having no dependencies may execute earlier than it is listed on the command-line.

For example, the following will execute the test and deploy tasks in the order that they are listed on the command-line and will also execute the dependencies for each task.

$ gradle test deploy

Command line order safety

Although Gradle will always attempt to execute the build quickly, command line ordering safety will also be honored.

For example, the following will execute clean and build along with their dependencies:

$ gradle clean build

However, the intention implied in the command line order is that clean should run first and then build. It would be incorrect to execute clean after build, even if doing so would cause the build to execute faster since clean would remove what build created.

Conversely, if the command line order was build followed by clean, it would not be correct to execute clean before build. Although Gradle will execute the build as quickly as possible, it will also respect the safety of the order of tasks specified on the command line and ensure that clean runs before build when specified in that order.

Note that command line order safety relies on tasks properly declaring what they create, consume, or remove.

Excluding tasks from execution

You can exclude a task from being executed using the -x or --exclude-task command-line option and providing the name of the task to exclude:

    $ gradle dist --exclude-task test
    > Task :compile
    compiling source
    > Task :dist
    building the distribution
    BUILD SUCCESSFUL in 0s
    2 actionable tasks: 2 executed

You can see that the test task is not executed, even though the dist task depends on it.

The test task’s dependencies, such as compileTest, are not executed either.

The dependencies of test that other tasks depend on, such as compile, are still executed.

Forcing tasks to execute

You can force Gradle to execute all tasks ignoring up-to-date checks using the --rerun-tasks option:

$ gradle test --rerun-tasks

This will force test and all task dependencies of test to execute. It is similar to running gradle clean test, but without the build’s generated output being deleted.

Alternatively, you can tell Gradle to rerun a specific task using the --rerun built-in task option.

Continue the build after a task failure

By default, Gradle aborts execution and fails the build when any task fails. This allows the build to complete sooner and prevents cascading failures from obfuscating the root cause of an error.

You can use the --continue option to force Gradle to execute every task when a failure occurs:

$ gradle test --continue

When executed with --continue, Gradle executes every task in the build if all the dependencies for that task are completed without failure.

For example, tests do not run if there is a compilation error in the code under test because the test task depends on the compilation task.

Gradle outputs each of the encountered failures at the end of the build.

Note: If any tests fail, many test suites fail the entire test task. Code coverage and reporting tools frequently run after the test task, so "fail fast" behavior may halt execution before those tools run.
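In a continuous integration script, --continue is often wrapped in a small function so that every verification task gets a chance to run. The sketch below assumes a POSIX-compatible shell. The GRADLE variable and the ci_check name are illustrative, and the check task and --console=plain option are the ones described in this section:

```shell
# GRADLE is a hypothetical override for the executable; it defaults to the wrapper.
GRADLE="${GRADLE:-./gradlew}"

# Run all verification tasks, keep going after a task failure,
# and use plain console output, which reads better in CI logs.
ci_check() {
  "$GRADLE" check --continue --console=plain "$@"
}

# Usage in a CI job (not executed here):
# ci_check || echo "check failed; see the failures listed at the end of the build output"
```

Keeping the executable in a variable makes the function easy to dry-run, for example with GRADLE=echo to print the command instead of executing it.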

Name abbreviation

When you specify tasks on the command-line, you don’t have to provide the full name of the task.

You can provide enough of the task name to identify the task uniquely.

For example, it is likely gradle che is enough for Gradle to identify the check task.

The same applies to project names. You can execute the check task in the library subproject with the gradle lib:che command.

You can use camel case patterns for more complex abbreviations. These patterns are expanded to match camel case and kebab case names.

For example, the pattern foBa (or fB) matches fooBar and foo-bar.

More concretely, you can run the compileTest task in the my-awesome-library subproject with the command gradle mAL:cT.

    $ gradle mAL:cT
    > Task :my-awesome-library:compileTest
    compiling unit tests
    BUILD SUCCESSFUL in 0s
    1 actionable task: 1 executed

Abbreviations can also be used with the -x command-line option.
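To build intuition for how a camel case pattern can match both camel case and kebab case names, the sketch below turns an abbreviation into a regular expression. It is a simplified approximation written for illustration, not Gradle's actual matcher. It assumes a POSIX-compatible shell, handles only letters and digits in the pattern, and does not model ambiguity between several candidate names. The helper names are illustrative:

```shell
# Translate a camel-case abbreviation into an extended regular expression.
# Each uppercase letter after the first character starts a new word, which may
# appear in the full name as a camelCase hump or after a hyphen.
abbrev_to_regex() (
  rest="$1"
  regex='^'
  first=1
  while [ -n "$rest" ]; do
    ch="${rest%"${rest#?}"}"   # first character of the remaining pattern
    rest="${rest#?}"
    case "$ch" in
      [[:upper:]])
        if [ "$first" -eq 0 ]; then
          lower="$(printf '%s' "$ch" | tr '[:upper:]' '[:lower:]')"
          # Finish the previous word, then start the next one.
          regex="${regex}[[:lower:][:digit:]]*(${ch}|-${lower})"
        else
          regex="${regex}${ch}"
        fi ;;
      *) regex="${regex}${ch}" ;;
    esac
    first=0
  done
  # Allow the last word to continue to the end of the name.
  printf '%s[[:lower:][:digit:]]*$\n' "$regex"
)

# Succeed when the full name ($2) matches the abbreviation ($1).
matches_abbrev() {
  printf '%s\n' "$2" | grep -Eq "$(abbrev_to_regex "$1")"
}

matches_abbrev foBa fooBar && echo "foBa matches fooBar"
matches_abbrev fB foo-bar && echo "fB matches foo-bar"
matches_abbrev mAL my-awesome-library && echo "mAL matches my-awesome-library"
```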

Tracing name expansion

For complex projects, it might be ambiguous if the intended tasks were executed. When using abbreviated names, a single typo can lead to the execution of unexpected tasks.

When INFO or more verbose logging is enabled, the output will contain extra information about the project and task name expansion.

For example, when executing the mAL:cT command on the previous example, the following log messages will be visible:

    No exact project with name ':mAL' has been found. Checking for abbreviated names.
    Found exactly one project that matches the abbreviated name ':mAL': ':my-awesome-library'.
    No exact task with name ':cT' has been found. Checking for abbreviated names.
    Found exactly one task name, that matches the abbreviated name ':cT': ':compileTest'.

Common tasks

The following are task conventions applied by built-in and most major Gradle plugins.

Computing all outputs

It is common in Gradle builds for the build task to designate assembling all outputs and running all checks:

$ gradle build

Running applications

It is common for applications to run with the run task, which assembles the application and executes some script or binary:

$ gradle run

Running all checks

It is common for all verification tasks, including tests and linting, to be executed using the check task:

$ gradle check

Cleaning outputs

You can delete the contents of the build directory using the clean task. Doing so will cause pre-computed outputs to be lost, causing significant additional build time for the subsequent task execution:

$ gradle clean

Project reporting

Gradle provides several built-in tasks which show particular details of your build. This can be useful for understanding your build’s structure and dependencies, as well as debugging problems.

Listing projects

Running the projects task gives you a list of the subprojects of the selected project, displayed in a hierarchy:

$ gradle projects

You also get a project report with Build Scan.

Listing tasks

Running gradle tasks gives you a list of the main tasks of the selected project. This report shows the default tasks for the project, if any, and a description for each task:

$ gradle tasks

By default, this report shows only those tasks assigned to a task group.

Groups (such as verification, publishing, help, build…) are available as the header of each section when listing tasks:

> Task :tasks

Build tasks

-----------

assemble - Assembles the outputs of this project.

Build Setup tasks

-----------------

init - Initializes a new Gradle build.

Distribution tasks

------------------

assembleDist - Assembles the main distributions

Documentation tasks

-------------------

javadoc - Generates Javadoc API documentation for the main source code.

You can obtain more information in the task listing using the --all option:

$ gradle tasks --all

The option --no-all can limit the report to tasks assigned to a task group.

If you need to be more precise, you can display only the tasks from a specific group using the --group option:

$ gradle tasks --group="build setup"

Show task usage details

Running gradle help --task someTask gives you detailed information about a specific task:

$ gradle -q help --task libs

Detailed task information for libs

Paths

:api:libs

:webapp:libs

Type

Task (org.gradle.api.Task)

Options

--rerun Causes the task to be re-run even if up-to-date.

Description

Builds the JAR

Group

build

This information includes the full task path, the task type, possible task-specific command line options, and the description of the given task.

You can show detailed information about the task class types with the --types option, or hide it with --no-types.

Reporting dependencies

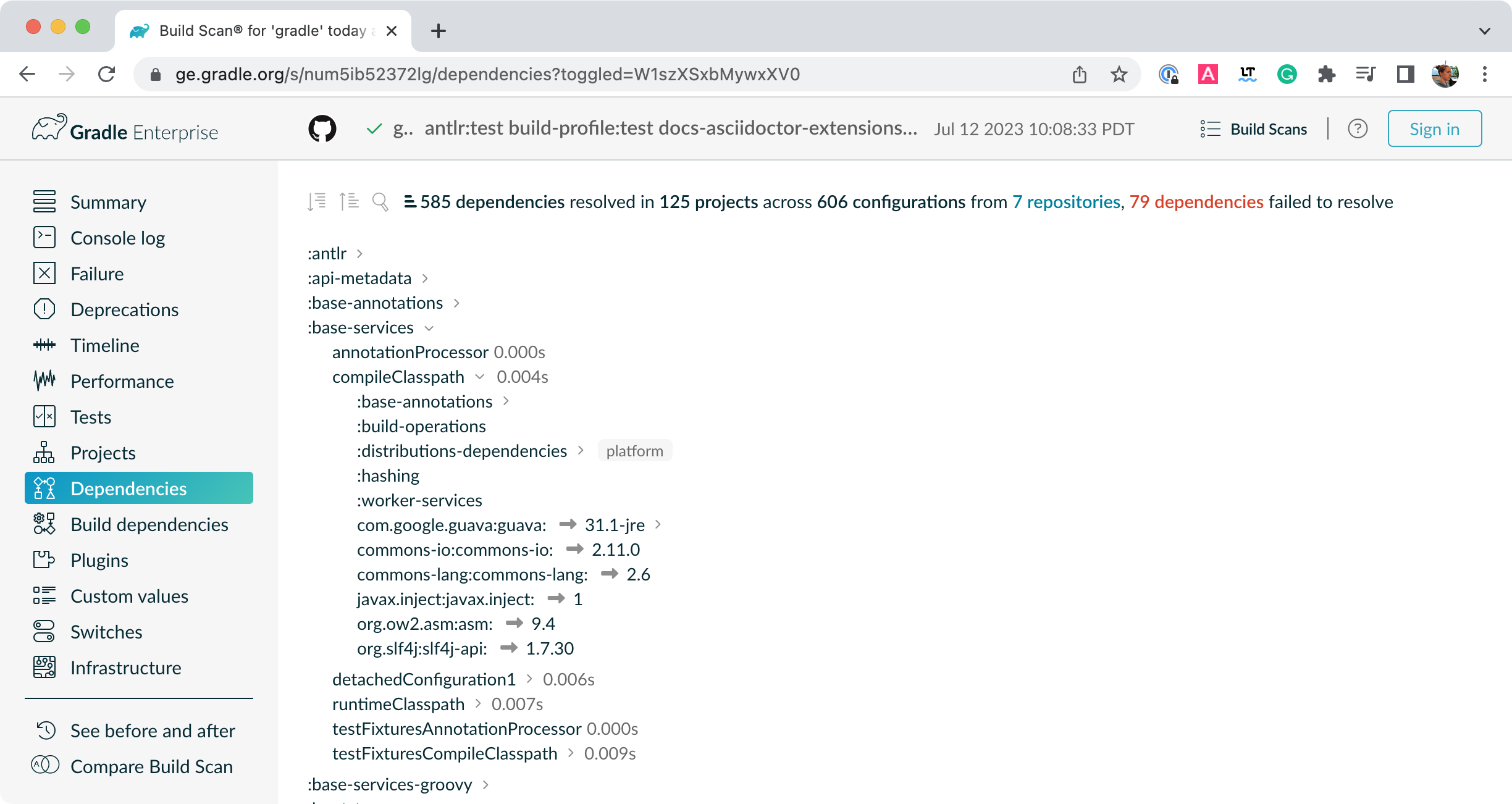

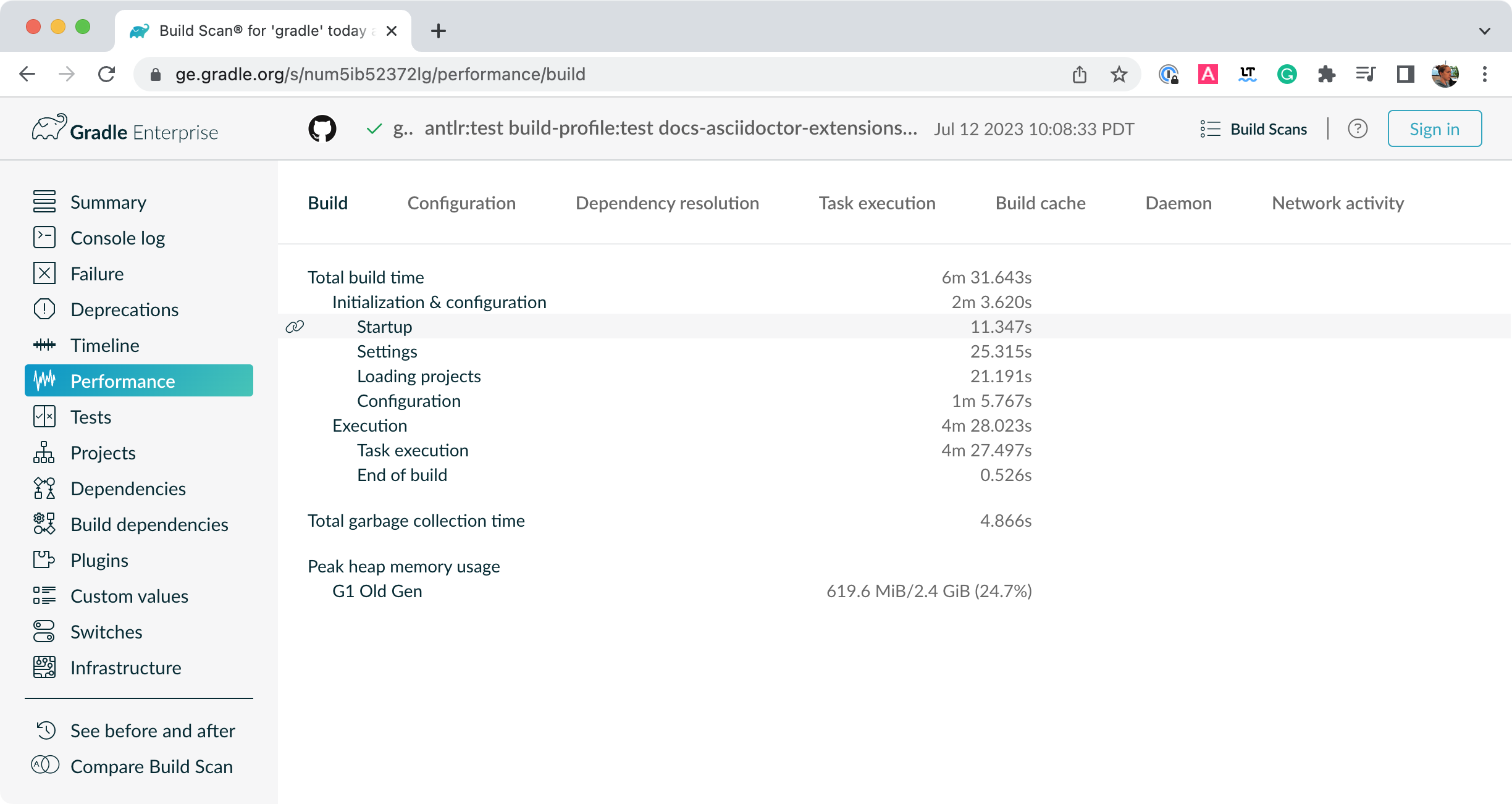

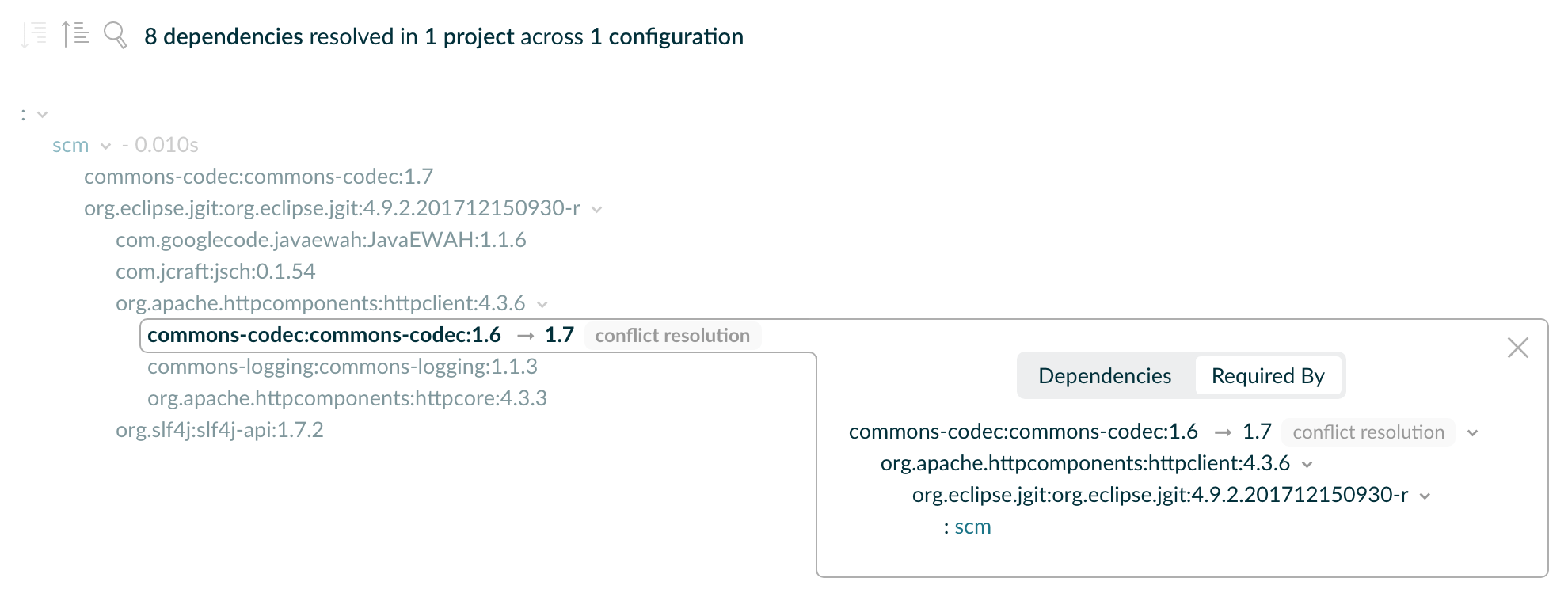

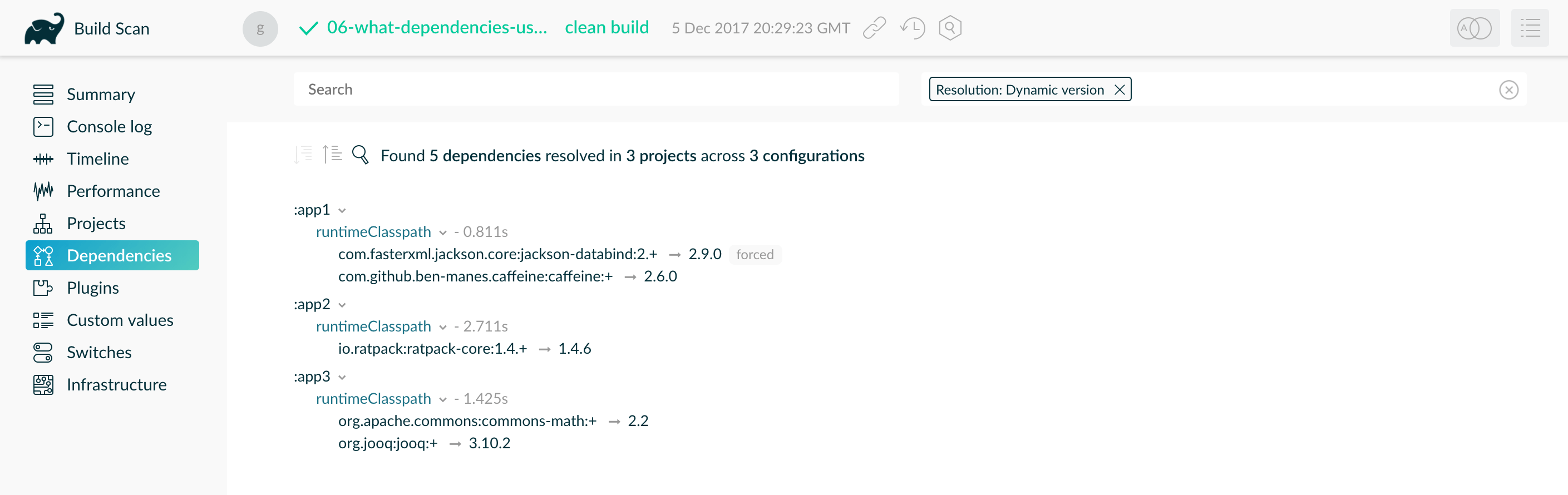

Build Scan gives a full, visual report of what dependencies exist on which configurations, transitive dependencies, and dependency version selection.

You can create a Build Scan using the --scan option:

$ gradle myTask --scan

This will give you a link to a web-based report, where you can find dependency information like this:

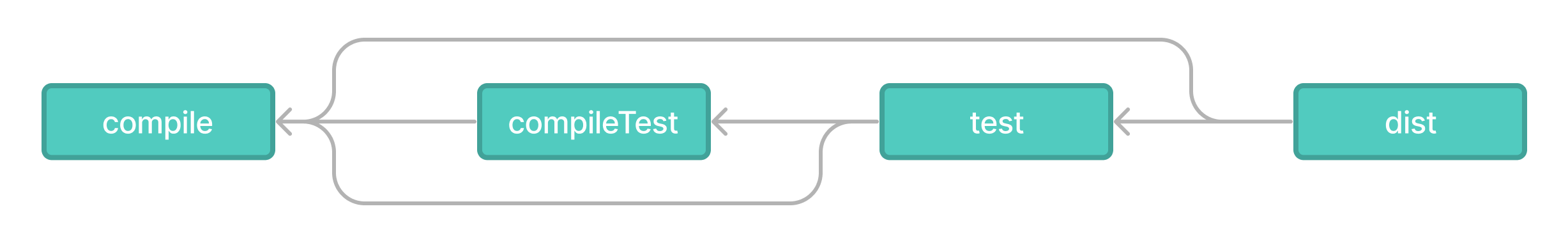

Listing project dependencies

Running the dependencies task gives you a list of the dependencies of the selected project, broken down by configuration. For each configuration, the direct and transitive dependencies of that configuration are shown in a tree.

Below is an example of this report:

$ gradle dependencies

> Task :app:dependencies

------------------------------------------------------------
Project ':app'
------------------------------------------------------------

compileClasspath - Compile classpath for source set 'main'.
+--- project :model
|    \--- org.json:json:20220924
+--- com.google.inject:guice:5.1.0
|    +--- javax.inject:javax.inject:1
|    +--- aopalliance:aopalliance:1.0
|    \--- com.google.guava:guava:30.1-jre -> 28.2-jre
|         +--- com.google.guava:failureaccess:1.0.1
|         +--- com.google.guava:listenablefuture:9999.0-empty-to-avoid-conflict-with-guava
|         +--- com.google.code.findbugs:jsr305:3.0.2
|         +--- org.checkerframework:checker-qual:2.10.0 -> 3.28.0
|         +--- com.google.errorprone:error_prone_annotations:2.3.4
|         \--- com.google.j2objc:j2objc-annotations:1.3
+--- com.google.inject:guice:{strictly 5.1.0} -> 5.1.0 (c)
+--- org.json:json:{strictly 20220924} -> 20220924 (c)
+--- javax.inject:javax.inject:{strictly 1} -> 1 (c)
+--- aopalliance:aopalliance:{strictly 1.0} -> 1.0 (c)
+--- com.google.guava:guava:{strictly [28.0-jre, 28.5-jre]} -> 28.2-jre (c)
+--- com.google.guava:guava:{strictly 28.2-jre} -> 28.2-jre (c)
+--- com.google.guava:failureaccess:{strictly 1.0.1} -> 1.0.1 (c)
+--- com.google.guava:listenablefuture:{strictly 9999.0-empty-to-avoid-conflict-with-guava} -> 9999.0-empty-to-avoid-conflict-with-guava (c)
+--- com.google.code.findbugs:jsr305:{strictly 3.0.2} -> 3.0.2 (c)
+--- org.checkerframework:checker-qual:{strictly 3.28.0} -> 3.28.0 (c)
+--- com.google.errorprone:error_prone_annotations:{strictly 2.3.4} -> 2.3.4 (c)
\--- com.google.j2objc:j2objc-annotations:{strictly 1.3} -> 1.3 (c)

Concrete examples of build scripts and output are available in Viewing and debugging dependencies.

Running the buildEnvironment task visualises the buildscript dependencies of the selected project, similarly to how gradle dependencies visualizes the dependencies of the software being built:

$ gradle buildEnvironment

Running the dependencyInsight task gives you an insight into a particular dependency (or dependencies) that match specified input:

$ gradle dependencyInsight --dependency [...] --configuration [...]

The --configuration parameter restricts the report to a particular configuration such as compileClasspath.

Listing project properties

Running the properties task gives you a list of the properties of the selected project:

$ gradle -q api:properties

------------------------------------------------------------
Project ':api'
------------------------------------------------------------

allprojects: [project ':api']
ant: org.gradle.api.internal.project.DefaultAntBuilder@12345
antBuilderFactory: org.gradle.api.internal.project.DefaultAntBuilderFactory@12345
artifacts: org.gradle.api.internal.artifacts.dsl.DefaultArtifactHandler_Decorated@12345
asDynamicObject: DynamicObject for project ':api'
baseClassLoaderScope: org.gradle.api.internal.initialization.DefaultClassLoaderScope@12345

You can also query a single property with the optional --property argument:

$ gradle -q api:properties --property allprojects

------------------------------------------------------------
Project ':api'
------------------------------------------------------------

allprojects: [project ':api']

Command-line completion

Gradle provides bash and zsh tab completion support for tasks, options, and Gradle properties through gradle-completion (installed separately).

Debugging options

-?,-h,--help-

Shows a help message with the built-in CLI options. To show project-contextual options, including help on a specific task, see the help task.

-v,--version-

Prints Gradle, Groovy, Ant, Launcher & Daemon JVM, and operating system version information and exits without executing any tasks.

-V,--show-version-

Prints Gradle, Groovy, Ant, Launcher & Daemon JVM, and operating system version information and continues execution of specified tasks.

-S,--full-stacktrace-

Print out the full (very verbose) stacktrace for any exceptions. See also logging options.

-s,--stacktrace-

Print out the stacktrace also for user exceptions (e.g. compile error). See also logging options.

--scan-

Create a Build Scan with fine-grained information about all aspects of your Gradle build.

-Dorg.gradle.debug=true-

A Gradle property that debugs the Gradle Daemon process. Gradle will wait for you to attach a debugger at localhost:5005 by default.

-Dorg.gradle.debug.host=(host address)-

A Gradle property that specifies the host address to listen on or connect to when debug is enabled. In the server mode on Java 9 and above, passing * for the host will make the server listen on all network interfaces. By default, no host address is passed to JDWP, so on Java 9 and above, the loopback address is used, while earlier versions listen on all interfaces.

-Dorg.gradle.debug.port=(port number)-

A Gradle property that specifies the port number to listen on when debug is enabled. Default is 5005.

-Dorg.gradle.debug.server=(true,false)-

A Gradle property that if set to true and debugging is enabled, will cause Gradle to run the build with the socket-attach mode of the debugger. Otherwise, the socket-listen mode is used. Default is true.

-Dorg.gradle.debug.suspend=(true,false)-

A Gradle property that if set to true and debugging is enabled, the JVM running Gradle will suspend until a debugger is attached. Default is true.

Performance options

Try these options when optimizing and improving build performance.

Many of these options can be specified in the gradle.properties file, so command-line flags are unnecessary.

--build-cache,--no-build-cache-

Toggles the Gradle Build Cache. Gradle will try to reuse outputs from previous builds. Default is off.

--configuration-cache,--no-configuration-cache-

Toggles the Configuration Cache. Gradle will try to reuse the build configuration from previous builds. Default is off.

--configuration-cache-problems=(fail,warn)-

Configures how the configuration cache handles problems. Default is fail.

Set to warn to report problems without failing the build.

Set to fail to report problems and fail the build if there are any problems.

--configure-on-demand,--no-configure-on-demand Incubating-

Toggles configure-on-demand. Only relevant projects are configured in this build run. Default is off.

--max-workers-

Sets the maximum number of workers that Gradle may use. Default is number of processors.

--parallel,--no-parallel-

Build projects in parallel. For limitations of this option, see Parallel Project Execution. Default is off.

--priority-

Specifies the scheduling priority for the Gradle daemon and all processes launched by it. Values are

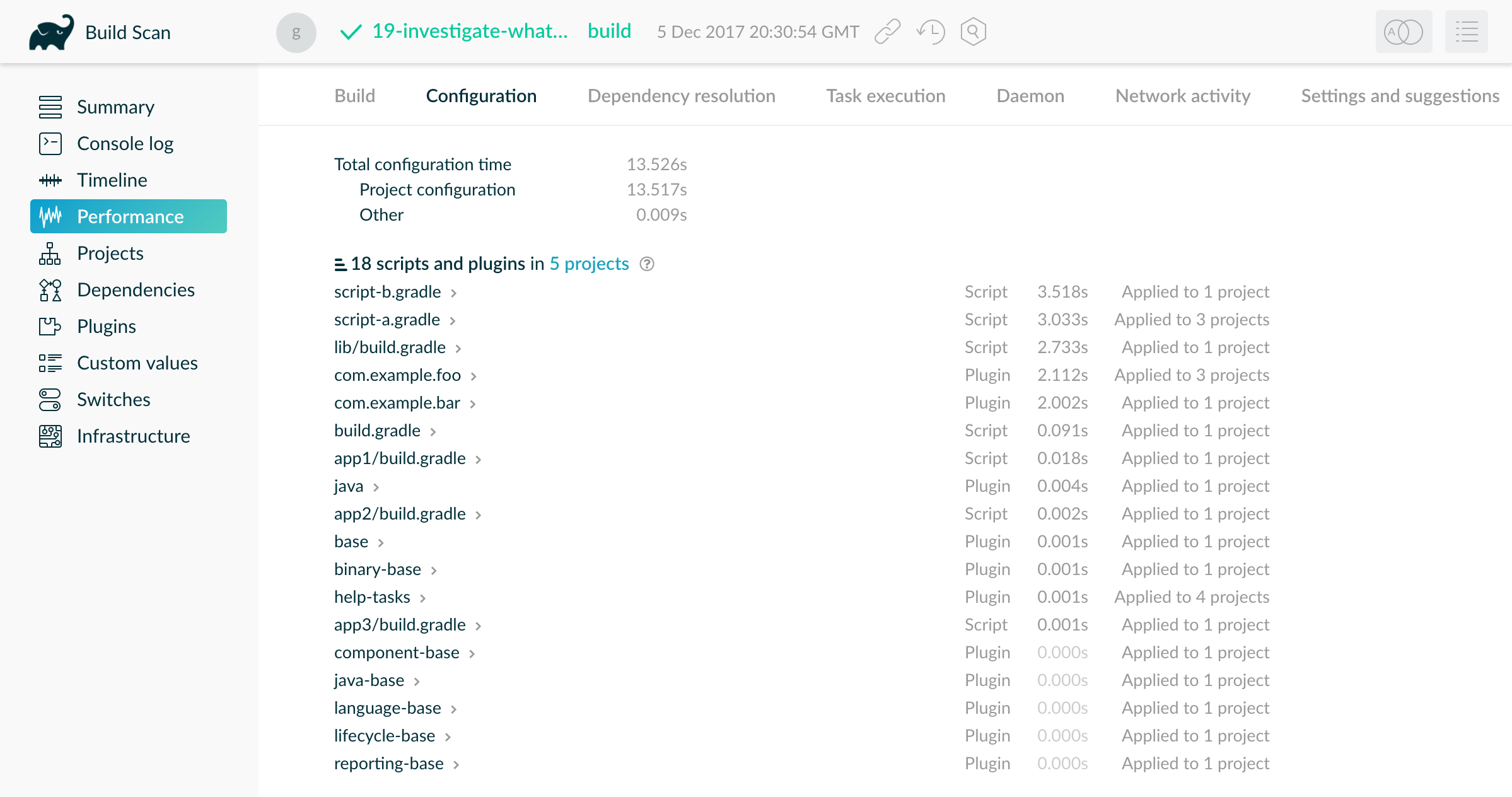

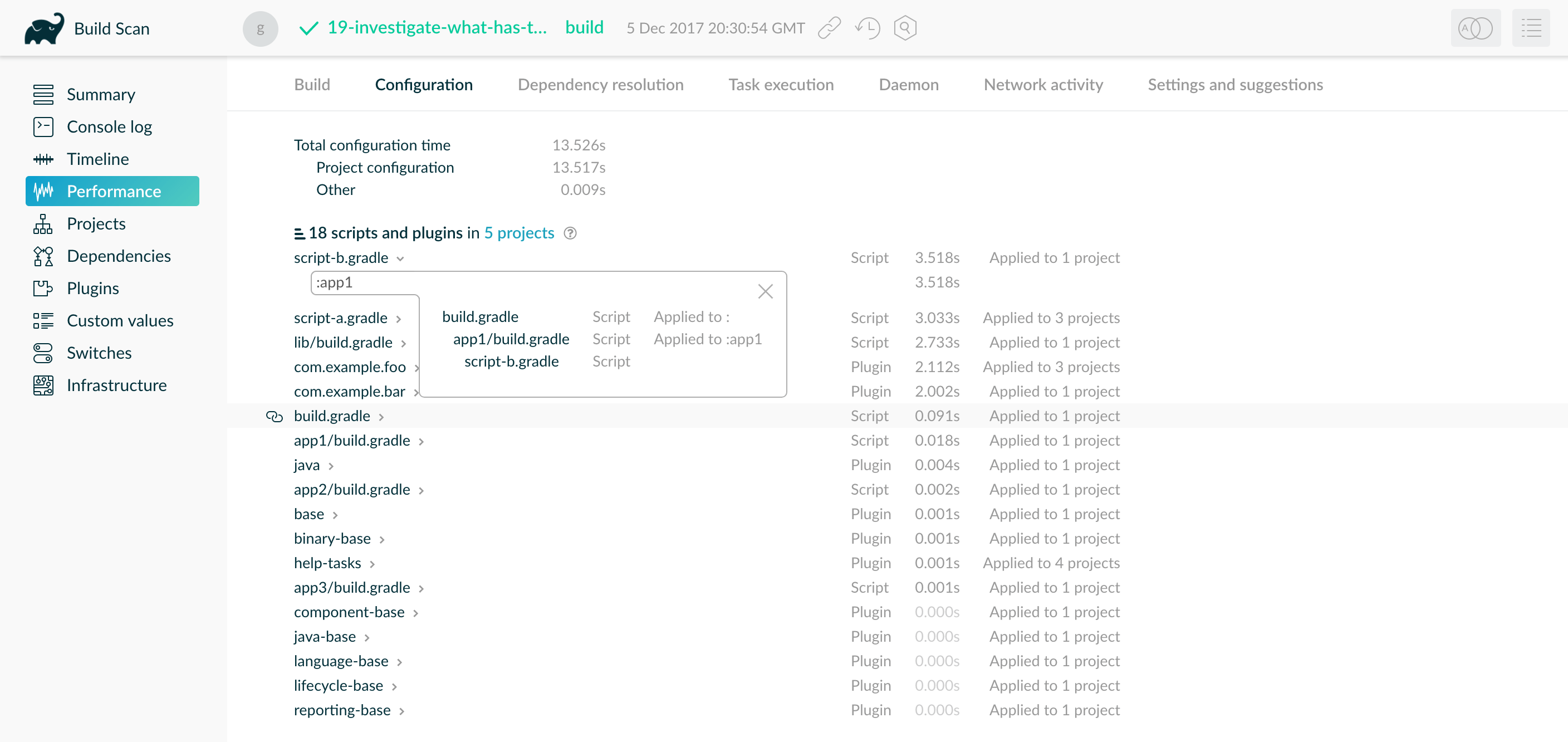

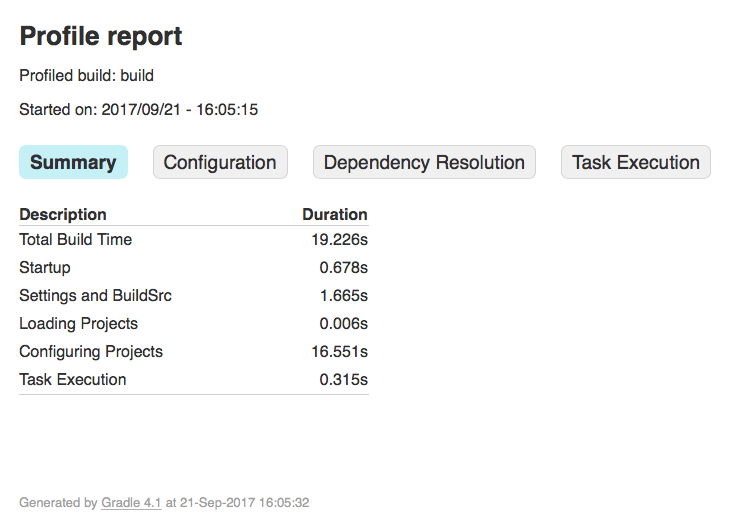

normalorlow. Default is normal. --profile-

Generates a high-level performance report in the

layout.buildDirectory.dir("reports/profile")directory.--scanis preferred. --scan-

Generate a Build Scan with detailed performance diagnostics.

--watch-fs,--no-watch-fs-

Toggles watching the file system. When enabled, Gradle reuses information it collects about the file system between builds. Enabled by default on operating systems where Gradle supports this feature.
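As noted at the start of this section, many of these flags have gradle.properties equivalents. The following shell snippet is a minimal sketch that appends a few of them to the gradle.properties file in the current directory (assumed here to be the project root). The property names are the Gradle properties for the build cache, configuration cache, parallel execution, and worker count; the values, such as four workers, are illustrative assumptions rather than recommendations.

```shell
# Append performance-related Gradle properties to gradle.properties
# in the current directory (assumed to be the project root).
# The values are examples only; tune them for your own build.
cat >> gradle.properties <<'EOF'
org.gradle.caching=true
org.gradle.configuration-cache=true
org.gradle.parallel=true
org.gradle.workers.max=4
EOF
```

With these entries in place, the corresponding command-line flags no longer need to be typed on every invocation. A flag given on the command line, such as --no-build-cache, still takes precedence for that run.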

Gradle daemon options

You can manage the Gradle Daemon through the following command line options.

--daemon,--no-daemon-

Use the Gradle Daemon to run the build. Starts the daemon if not running or the existing daemon is busy. Default is on.

--foreground-

Starts the Gradle Daemon in a foreground process.

--status (Standalone command)-

Run gradle --status to list running and recently stopped Gradle daemons. It only displays daemons of the same Gradle version.

--stop (Standalone command)-

Run gradle --stop to stop all Gradle Daemons of the same version.

-Dorg.gradle.daemon.idletimeout=(number of milliseconds)-

A Gradle property wherein the Gradle Daemon will stop itself after this number of milliseconds of idle time. Default is 10800000 (3 hours).
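Because the idle timeout is given in milliseconds, it can be easier to compute the value than to type it by hand. The snippet below is a small sketch that assumes a one-hour timeout (an arbitrary example) and stores it in the gradle.properties file of the current directory.

```shell
# Convert a timeout in hours to milliseconds; one hour is an example value.
hours=1
idle_ms=$((hours * 60 * 60 * 1000))

# Persist the setting in gradle.properties in the current directory.
printf 'org.gradle.daemon.idletimeout=%s\n' "$idle_ms" >> gradle.properties

echo "$idle_ms"   # prints 3600000
```

The same arithmetic with hours=3 gives 10800000, the default mentioned above.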

Logging options

Setting log level

You can customize the verbosity of Gradle logging with the following options, ordered from least verbose to most verbose.

-Dorg.gradle.logging.level=(quiet,warn,lifecycle,info,debug)-

A Gradle property that sets the logging level.

-q,--quiet-

Log errors only.

-w,--warn-

Set log level to warn.

-i,--info-

Set log level to info.

-d,--debug-

Log in debug mode (includes normal stacktrace).

Lifecycle is the default log level.

Customizing log format

You can control the use of rich output (colors and font variants) by specifying the console mode in the following ways:

-Dorg.gradle.console=(auto,plain,colored,rich,verbose)-

A Gradle property that specifies the console mode. Different modes are described immediately below.

--console=(auto,plain,colored,rich,verbose)-

Specifies which type of console output to generate.

Set to plain to generate plain text only. This option disables all color and other rich output in the console output. This is the default when Gradle is not attached to a terminal.

Set to colored to generate colored output without rich status information such as progress bars.

Set to auto (the default) to enable color and other rich output in the console output when the build process is attached to a console or to generate plain text only when not attached to a console. This is the default when Gradle is attached to a terminal.

Set to rich to enable color and other rich output in the console output, regardless of whether the build process is attached to a console. When not attached to a console, the build output will use ANSI control characters to generate the rich output.

Set to verbose to enable color and other rich output like rich, with task names and outcomes shown at the lifecycle log level (as is done by default in Gradle 3.5 and earlier).

Reporting problems

--problems-report (enabled by default) Incubating-

Enable the generation of build/reports/problems-report.html. This is the default behaviour. The report is generated with problems provided to the Problems API.

--no-problems-report Incubating-

Disable the generation of build/reports/problems-report.html. By default, this report is generated with problems provided to the Problems API.

Showing or hiding warnings

By default, Gradle won’t display all warnings (e.g. deprecation warnings). Instead, Gradle will collect them and render a summary at the end of the build like:

Deprecated Gradle features were used in this build, making it incompatible with Gradle 5.0.

You can control the verbosity of warnings on the console with the following options:

-Dorg.gradle.warning.mode=(all,fail,none,summary)-

A Gradle property that specifies the warning mode. Different modes are described immediately below.

--warning-mode=(all,fail,none,summary)-

Specifies how to log warnings. Default is summary.

Set to all to log all warnings.

Set to fail to log all warnings and fail the build if there are any warnings.

Set to summary to suppress all warnings and log a summary at the end of the build.

Set to none to suppress all warnings, including the summary at the end of the build.
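The console mode and warning mode can also be fixed per project through the two Gradle properties named above. The sketch below records both in the gradle.properties file of the current directory; the values plain and all are example choices, such as one might pick for a CI machine, not defaults.

```shell
# Record console and warning-mode settings in gradle.properties
# in the current directory. "plain" and "all" are example choices.
cat >> gradle.properties <<'EOF'
org.gradle.console=plain
org.gradle.warning.mode=all
EOF
```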

Rich console

Gradle’s rich console displays extra information while builds are running.

Features:

- Progress bar and timer visually describe the overall status
- Parallel work-in-progress lines below describe what is happening now
- Colors and fonts are used to highlight significant output and errors

Execution options

The following options affect how builds are executed by changing what is built or how dependencies are resolved.

--include-build-

Run the build as a composite, including the specified build.

--offline-

Specifies that the build should operate without accessing network resources.

-U,--refresh-dependencies-

Refresh the state of dependencies.

--continue-

Continue task execution after a task failure.

-m,--dry-run-

Run Gradle with all task actions disabled. Use this to show which task would have executed.

--task-graph Since 9.1.0 Incubating-

Run Gradle with all task actions disabled and print the task dependency graph.

-t,--continuous-

Enables continuous build. Gradle does not exit and will re-execute tasks when task file inputs change.

--write-locks-

Indicates that all resolved configurations that are lockable should have their lock state persisted.

--update-locks <group:name>[,<group:name>]*-

Indicates that versions for the specified modules have to be updated in the lock file. This flag also implies --write-locks.

-a,--no-rebuild-

Do not rebuild project dependencies. Useful for debugging and fine-tuning buildSrc, but can lead to wrong results. Use with caution!

Dependency verification options

Learn more about this in dependency verification.

-F=(strict,lenient,off),--dependency-verification=(strict,lenient,off)-

Configures the dependency verification mode.

The default mode is strict.

-M,--write-verification-metadata-

Generates checksums for dependencies used in the project (comma-separated list) for dependency verification.

--refresh-keys-

Refresh the public keys used for dependency verification.

--export-keys-

Exports the public keys used for dependency verification.

Environment options

You can customize many aspects of build scripts, settings, caches, and so on through the options below.

-g,--gradle-user-home-

Specifies the Gradle User Home directory. The default is the .gradle directory in the user’s home directory.

-p,--project-dir-

Specifies the start directory for Gradle. Defaults to current directory.

--project-cache-dir-

Specifies the project-specific cache directory. Default value is .gradle in the root project directory.

-D,--system-prop-

Sets a system property of the JVM, for example -Dmyprop=myvalue.

-I,--init-script-

Specifies an initialization script.

-P,--project-prop-

Sets a project property of the root project, for example -Pmyprop=myvalue.

-Dorg.gradle.jvmargs-

A Gradle property that sets JVM arguments.

-Dorg.gradle.java.home-

A Gradle property that sets the JDK home dir.

Task options

Tasks may define task-specific options which are different from most of the global options described in the sections above (which are interpreted by Gradle itself, can appear anywhere in the command line, and can be listed using the --help option).

Task options:

- Are consumed and interpreted by the tasks themselves;
- Must be specified immediately after the task in the command-line;
- May be listed using gradle help --task someTask (see Show task usage details).

To learn how to declare command-line options for your own tasks, see Declaring and Using Command Line Options.

Built-in task options

Built-in task options are options available as task options for all tasks. At this time, the following built-in task options exist:

--rerun-

Causes the task to be rerun even if up-to-date. Similar to --rerun-tasks, but for a specific task.

Bootstrapping new projects

Creating new Gradle builds

Use the built-in gradle init task to create a new Gradle build, with new or existing projects.

$ gradle init

Most of the time, a project type is specified.

Available types include basic (default), java-library, java-application, and more.

See init plugin documentation for details.

$ gradle init --type java-library

Standardize and provision Gradle

The built-in gradle wrapper task generates a script, gradlew, that invokes a declared version of Gradle, downloading it beforehand if necessary.

$ gradle wrapper --gradle-version=8.1

You can also specify --distribution-type=(bin|all), --gradle-distribution-url, --gradle-distribution-sha256-sum in addition to --gradle-version.

Full details on using these options are documented in the Gradle wrapper section.

Continuous build

Continuous Build allows you to automatically re-execute the requested tasks when file inputs change.

You can execute the build in this mode using the -t or --continuous command-line option.

Learn more in Continuous Builds.

Logging and Output

The build log is the primary way Gradle communicates what’s happening during a build. Clear logging helps you quickly understand your build status, identify issues, and troubleshoot effectively. Too much noise in the logs can obscure important warnings or errors.

Gradle provides flexible logging controls, enabling you to adjust verbosity and detail according to your needs.

Gradle Log levels

Gradle defines six primary log levels:

| Level | Description |
|---|---|
| ERROR | Error messages |
| QUIET | Important information messages |
| WARN | Warning messages |
| LIFECYCLE | Progress information messages |
| INFO | Information messages |
| DEBUG | Debug messages |

The default logging level is LIFECYCLE, providing progress updates without overwhelming detail.

Choosing and setting a log level

You can set the log level either through command-line options or by configuring the gradle.properties file.

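| CLI Option | Property | Outputs Log Levels |
|---|---|---|
| -q or --quiet | org.gradle.logging.level=quiet | QUIET and higher |
| -w or --warn | org.gradle.logging.level=warn | WARN and higher |
| no logging options | | LIFECYCLE and higher |
| -i or --info | org.gradle.logging.level=info | INFO and higher |
| -d or --debug | org.gradle.logging.level=debug | DEBUG and higher (all log messages) |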

For example, to set a consistent log level in your project’s gradle.properties file:

org.gradle.logging.level=info

Similarly on the command line:

$ ./gradlew run --info

You can emit log messages directly from build scripts and tasks using Gradle’s built-in logger:

tasks.register("logtask") {

doLast {

logger.lifecycle("Lifecycle: Build progress info.")

logger.info("Info: Additional insights.")

logger.debug("Debug: Detailed troubleshooting info.")

}

}

tasks.register('logtask') {

doLast {

logger.lifecycle('Lifecycle: Build progress info.')

logger.info('Info: Additional insights.')

logger.debug('Debug: Detailed troubleshooting info.')

}

}

Use appropriate log levels (lifecycle, info, debug) to ensure your build output is clear and informative.

|

Caution

|

The DEBUG log level can expose sensitive security information to the console.

|

Stacktrace options

Stacktraces are useful for diagnosing issues during a build failure. You can control stacktrace output via command-line options or properties:

| CLI Option | Gradle Property | Stacktrace Shown |
|---|---|---|
| -s or --stacktrace | org.gradle.logging.stacktrace=all | Truncated stacktraces are printed. We recommend this over full stacktraces. Groovy full stacktraces are extremely verbose due to the underlying dynamic invocation mechanisms. Yet they usually do not contain relevant information about what has gone wrong in your code. This option renders stacktraces for deprecation warnings. |
| -S or --full-stacktrace | org.gradle.logging.stacktrace=full | The full stacktraces are printed out. This option renders stacktraces for deprecation warnings. |
| (none) | (none) | No stacktraces are printed to the console in case of a build error (e.g., a compile error). Only in case of internal exceptions will stacktraces be printed. If the |

For example, to always display a full stacktrace on build errors, set in gradle.properties:

org.gradle.logging.stacktrace=full

Logging Sensitive Information

Running Gradle with the DEBUG log level can potentially expose sensitive information to the console and build log.

This information might include:

- Environment variables
- Private repository credentials
- Build cache and Develocity credentials
- Plugin Portal publishing credentials

It’s important to avoid using the DEBUG log level when running on public Continuous Integration (CI) services.

Build logs on these services are accessible to the public and can expose sensitive information.

Even on private CI services, logging sensitive credentials may pose a risk depending on your organization’s threat model.

It’s advisable to discuss this with your organization’s security team.

Some CI providers attempt to redact sensitive credentials from logs, but this process is not foolproof and typically only redacts exact matches of pre-configured secrets.

If you suspect that a Gradle Plugin may inadvertently expose sensitive information, please contact our security team for assistance with disclosure.

Custom log messages

A simple option for logging in your build file is to write messages to standard output.

Gradle redirects anything written to standard output to its logging system at the QUIET log level:

println("A message which is logged at QUIET level")

println 'A message which is logged at QUIET level'

Gradle also provides a logger property to a build script, which is an instance of Logger.

This interface extends the SLF4J Logger interface and adds a few Gradle-specific methods.

Below is an example of how this is used in the build script:

logger.quiet("An info log message which is always logged.")

logger.error("An error log message.")

logger.warn("A warning log message.")

logger.lifecycle("A lifecycle info log message.")

logger.info("An info log message.")

logger.debug("A debug log message.")

logger.trace("A trace log message.") // Gradle never logs TRACE level logs

logger.quiet('An info log message which is always logged.')

logger.error('An error log message.')

logger.warn('A warning log message.')

logger.lifecycle('A lifecycle info log message.')

logger.info('An info log message.')

logger.debug('A debug log message.')

logger.trace('A trace log message.') // Gradle never logs TRACE level logs

Use the typical SLF4J pattern to replace a placeholder with an actual value in the log message.

logger.info("A {} log message", "info")

logger.info('A {} log message', 'info')

You can also hook into Gradle’s logging system from within other classes used in the build (classes from the buildSrc directory, for example) with an SLF4J logger.

You can use this logger the same way as you use the provided logger in the build script.

import org.slf4j.LoggerFactory

val slf4jLogger = LoggerFactory.getLogger("some-logger")

slf4jLogger.info("An info log message logged using SLF4j")

import org.slf4j.LoggerFactory

def slf4jLogger = LoggerFactory.getLogger('some-logger')

slf4jLogger.info('An info log message logged using SLF4j')

Logging from external tools and libraries

Internally, Gradle uses Ant and Ivy. Both have their own logging system. Gradle redirects their logging output into the Gradle logging system.

There is a 1:1 mapping from the Ant/Ivy log levels to the Gradle log levels, except the Ant/Ivy TRACE log level, which is mapped to the Gradle DEBUG log level.

This means the default Gradle log level will not show any Ant/Ivy output unless it is an error or a warning.

Many tools out there still use the standard output for logging.

By default, Gradle redirects standard output to the QUIET log level and standard error to the ERROR level.

This behavior is configurable.

The project object provides a LoggingManager, which allows you to change the log levels that standard out or error are redirected to when your build script is evaluated.

logging.captureStandardOutput(LogLevel.INFO)

println("A message which is logged at INFO level")

logging.captureStandardOutput LogLevel.INFO

println 'A message which is logged at INFO level'

To change the log level for standard out or error during task execution, use a LoggingManager.

tasks.register("logInfo") {

logging.captureStandardOutput(LogLevel.INFO)

doFirst {

println("A task message which is logged at INFO level")

}

}

tasks.register('logInfo') {

logging.captureStandardOutput LogLevel.INFO

doFirst {

println 'A task message which is logged at INFO level'

}

}

Gradle also integrates with the Java Util Logging, Jakarta Commons Logging and Log4j logging toolkits. Any log messages your build classes write using these logging toolkits will be redirected to Gradle’s logging system.

Changing what Gradle logs

|

Warning

|

This feature is deprecated and will be removed in the next major version without a replacement. The configuration cache limits the ability to customize Gradle’s logging UI. The custom logger can only implement supported listener interfaces. These interfaces do not receive events when the configuration cache entry is reused because the configuration phase is skipped. |

You can replace much of Gradle’s logging UI with your own. You could do this if you want to customize the UI somehow - to log more or less information or to change the formatting. Simply replace the logging using the Gradle.useLogger(java.lang.Object) method. This is accessible from a build script, an init script, or via the embedding API. Note that this completely disables Gradle’s default output. Below is an example init script that changes how task execution and build completion are logged:

useLogger(CustomEventLogger())

@Suppress("deprecation")

class CustomEventLogger() : BuildAdapter(), TaskExecutionListener {

override fun beforeExecute(task: Task) {

println("[${task.name}]")

}

override fun afterExecute(task: Task, state: TaskState) {

println()

}

override fun buildFinished(result: BuildResult) {

println("build completed")

if (result.failure != null) {

(result.failure as Throwable).printStackTrace()

}

}

}

useLogger(new CustomEventLogger())

@SuppressWarnings("deprecation")

class CustomEventLogger extends BuildAdapter implements TaskExecutionListener {

void beforeExecute(Task task) {

println "[$task.name]"

}

void afterExecute(Task task, TaskState state) {

println()

}

void buildFinished(BuildResult result) {

println 'build completed'

if (result.failure != null) {

result.failure.printStackTrace()

}

}

}

$ ./gradlew -I customLogger.init.gradle.kts build

> Task :compile
[compile]
compiling source

> Task :testCompile
[testCompile]
compiling test source

> Task :test
[test]
running unit tests

> Task :build
[build]

build completed
3 actionable tasks: 3 executed

$ ./gradlew -I customLogger.init.gradle build

> Task :compile
[compile]
compiling source

> Task :testCompile
[testCompile]
compiling test source

> Task :test
[test]
running unit tests

> Task :build
[build]

build completed
3 actionable tasks: 3 executed

Your logger can implement any of the listener interfaces listed below. When you register a logger, only the logging for the interfaces it implements is replaced. Logging for the other interfaces is left untouched. You can find out more about the listener interfaces in Build lifecycle events.

Gradle Wrapper

The recommended way to execute any Gradle build is with the help of the Gradle Wrapper (referred to as "Wrapper").

The Wrapper is a script (called gradlew or gradlew.bat) that invokes a declared version of Gradle, downloading it beforehand if necessary.

Instead of running gradle build using the installed Gradle, you use the Gradle Wrapper by calling ./gradlew build.

The Gradle Wrapper isn’t distributed as a standalone download—it’s created using the gradle wrapper task.

There are three ways to use the Wrapper:

- Adding the Wrapper - You set up a new Gradle project and add the Wrapper to it.
- Using the Wrapper - You run a project with the Wrapper that already provides it.
- Upgrading the Wrapper - You upgrade the Wrapper to a new version of Gradle.

When using the Wrapper instead of the installed Gradle, you gain the following benefits:

- Standardizes a project on a given Gradle version for more reliable and robust builds.
- Provisioning the Gradle version for different users is done with a simple Wrapper definition change.
- Provisioning the Gradle version for different execution environments (e.g., IDEs or Continuous Integration servers) is done with a simple Wrapper definition change.

The following sections explain each of these use cases in more detail.

1. Adding the Gradle Wrapper

The Gradle Wrapper is not something you download.

Generating the Wrapper files requires an installed version of the Gradle runtime on your machine as described in Installation. Thankfully, generating the initial Wrapper files is a one-time process.

Every vanilla Gradle build comes with a built-in task called wrapper.

The task is listed under the group "Build Setup tasks" when listing the tasks.

Executing the wrapper task generates the necessary Wrapper files in the project directory:

$ gradle wrapper

> Task :wrapper

BUILD SUCCESSFUL in 0s
1 actionable task: 1 executed

|

Tip

|

To make the Wrapper files available to other developers and execution environments, you need to check them into version control. Wrapper files, including the JAR file, are small. Adding the JAR file to version control is expected. Some organizations do not allow projects to submit binary files to version control, and there is no workaround available. |

The generated Wrapper properties file, gradle/wrapper/gradle-wrapper.properties, stores the information about the Gradle distribution:

- The server hosting the Gradle distribution.
- The type of Gradle distribution. By default, the -bin distribution contains only the runtime but no sample code and documentation.
- The Gradle version used for executing the build. By default, the wrapper task picks the same Gradle version used to generate the Wrapper files.
- Optionally, a timeout in ms used when downloading the Gradle distribution.
- Optionally, a boolean to set the validation of the distribution URL.

The following is an example of the generated distribution URL in gradle/wrapper/gradle-wrapper.properties:

distributionUrl=https\://services.gradle.org/distributions/gradle-9.3.1-bin.zip

|

Tip

|

Use the The |
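The distribution URL encodes both the Gradle version and the distribution type. As a rough illustration, the sketch below separates the two with POSIX shell parameter expansion. It assumes the file name follows the gradle-(version)-(type).zip pattern seen in the example URL, which a company-hosted distribution need not do; the variable names are chosen for this example only.

```shell
# The value as stored in gradle-wrapper.properties (the colon is escaped there).
url='https\://services.gradle.org/distributions/gradle-9.3.1-bin.zip'

file=${url##*/}          # strip everything up to the last slash
base=${file%.zip}        # drop the .zip extension
dist_type=${base##*-}    # text after the last hyphen
version=${base#gradle-}  # drop the leading "gradle-"
version=${version%-*}    # drop the trailing "-<type>"

echo "version=$version type=$dist_type"   # prints: version=9.3.1 type=bin
```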

All of those aspects are configurable at the time of generating the Wrapper files with the help of the following command line options:

--gradle-version-

The Gradle version used for downloading and executing the Wrapper. The resulting distribution URL is validated before it is written to the properties file.

For Gradle versions starting with major version 9, the version can be specified using only the major or minor version number. In such cases, the latest normal release matching that major or minor version will be used. For example, 9 resolves to the latest 9.x.y release, and 9.1 resolves to the latest 9.1.x release.

The following labels are allowed:

--distribution-type-

The Gradle distribution type used for the Wrapper. Available options are bin and all. The default value is bin.

--gradle-distribution-url-

The full URL pointing to the Gradle distribution ZIP file. This option makes --gradle-version and --distribution-type obsolete, as the URL already contains this information. This option is valuable if you want to host the Gradle distribution inside your company’s network. The URL is validated before it is written to the properties file.

--gradle-distribution-sha256-sum-

The SHA256 hash sum used for verifying the downloaded Gradle distribution.

--network-timeout-

The network timeout to use when downloading the Gradle distribution, in ms. The default value is 10000.

--no-validate-url-

Disables the validation of the configured distribution URL.

--validate-url-

Enables the validation of the configured distribution URL. Enabled by default.

If the distribution URL is configured with --gradle-version or --gradle-distribution-url, the URL is validated by sending a HEAD request in the case of the https scheme or by checking the existence of the file in the case of the file scheme.
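The two checks described above can be approximated outside of Gradle, for example to test a company-internal distribution URL before passing it to the wrapper task. The sketch below only illustrates the idea and is not Gradle's implementation: the function name validate_distribution_url is hypothetical, it assumes curl is installed for the https case, and it only handles file URLs that point to local absolute paths.

```shell
#!/bin/sh
# Hypothetical helper mirroring the two validation modes described above.
# Returns 0 if the distribution URL looks reachable, non-zero otherwise.
validate_distribution_url() {
    url="$1"
    case "$url" in
        file:*)
            # file scheme: turn file:/path or file:///path into /path
            # and check that the file exists on disk.
            path=$(printf '%s\n' "$url" | sed 's|^file:/*|/|')
            [ -f "$path" ]
            ;;
        https:*)
            # https scheme: send a HEAD request; --fail makes curl
            # return non-zero on HTTP error statuses.
            curl --head --silent --fail --location --output /dev/null "$url"
            ;;
        *)
            echo "unsupported scheme: $url" >&2
            return 2
            ;;
    esac
}
```

A non-zero return value suggests that the wrapper task would also reject the URL unless --no-validate-url is passed.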

Let’s assume the following use-case to illustrate the use of the command line options.

You would like to generate the Wrapper with version 9.3.1 and use the -all distribution so that your IDE can offer code completion and navigate to the Gradle source code.

The following command-line execution captures those requirements:

$ gradle wrapper --gradle-version 9.3.1 --distribution-type all

> Task :wrapper

BUILD SUCCESSFUL in 0s
1 actionable task: 1 executed

As a result, you can find the desired information (the generated distribution URL) in the Wrapper properties file:

distributionUrl=https\://services.gradle.org/distributions/gradle-9.3.1-all.zip

Let’s have a look at the following project layout to illustrate the expected Wrapper files:

Kotlin DSL:

.
├── a-subproject
│   └── build.gradle.kts
├── settings.gradle.kts
├── gradle
│   └── wrapper
│       ├── gradle-wrapper.jar
│       └── gradle-wrapper.properties
├── gradlew
└── gradlew.bat

Groovy DSL:

.
├── a-subproject
│   └── build.gradle
├── settings.gradle
├── gradle
│   └── wrapper
│       ├── gradle-wrapper.jar
│       └── gradle-wrapper.properties
├── gradlew
└── gradlew.bat

A Gradle project typically provides a settings.gradle(.kts) file and one build.gradle(.kts) file for each subproject.

The Wrapper files live alongside them, in the gradle directory and the root directory of the project.

The following list explains their purpose:

gradle-wrapper.jar

The Wrapper JAR file containing code for downloading the Gradle distribution.

gradle-wrapper.properties

A properties file responsible for configuring the Wrapper runtime behavior, e.g. the Gradle version compatible with this version. Note that more generic settings, like configuring the Wrapper to use a proxy, need to go into a different file.

gradlew, gradlew.bat

A shell script and a Windows batch script for executing the build with the Wrapper.

You can go ahead and execute the build with the Wrapper without installing the Gradle runtime. If the project you are working on does not contain those Wrapper files, you will need to generate them.

2. Using the Gradle Wrapper

It is always recommended to execute a build with the Wrapper to ensure a reliable, controlled, and standardized execution of the build.

Running a build with the Wrapper looks just like running it with a Gradle installation.

Depending on the operating system, you run either gradlew or gradlew.bat instead of the gradle command.

The following console output demonstrates the use of the Wrapper on a Windows machine for a Java-based project:

$ gradlew.bat build

Downloading https://services.gradle.org/distributions/gradle-5.0-all.zip
.....................................................................................
Unzipping C:\Documents and Settings\Claudia\.gradle\wrapper\dists\gradle-5.0-all\ac27o8rbd0ic8ih41or9l32mv\gradle-5.0-all.zip to C:\Documents and Settings\Claudia\.gradle\wrapper\dists\gradle-5.0-all\ac27o8rbd0ic8ih41or9l32mv
Set executable permissions for: C:\Documents and Settings\Claudia\.gradle\wrapper\dists\gradle-5.0-all\ac27o8rbd0ic8ih41or9l32mv\gradle-5.0\bin\gradle

BUILD SUCCESSFUL in 12s
1 actionable task: 1 executed

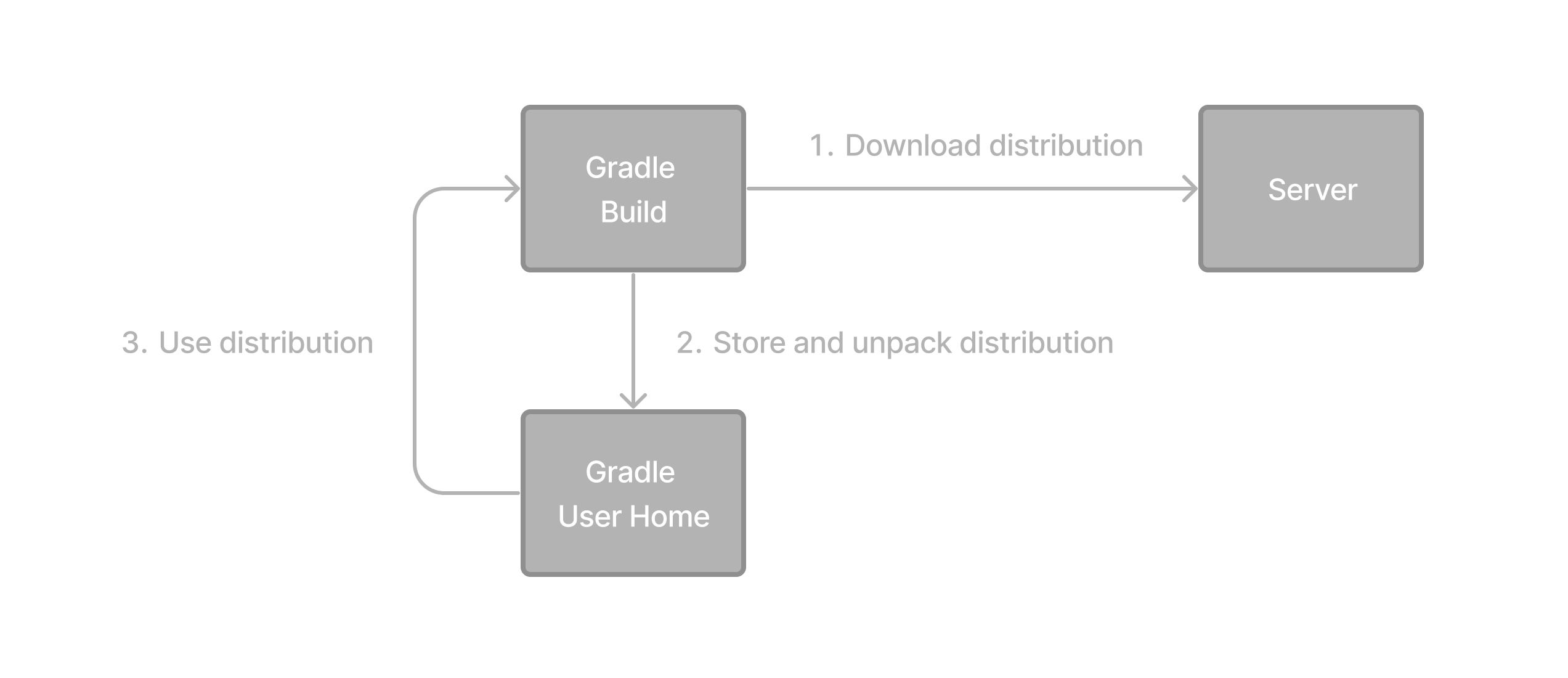

If the Gradle distribution was not provisioned to GRADLE_USER_HOME before, the Wrapper will download it and store it in GRADLE_USER_HOME.

Any subsequent build invocation will reuse the existing local distribution as long as the distribution URL in the Wrapper properties file doesn’t change.

Note: The Wrapper shell script and batch file reside in the root directory of a single or multi-project Gradle build. You will need to reference the correct path to those files in case you want to execute the build from a subproject directory, e.g. ../../gradlew tasks.
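To avoid typing relative paths such as ../../gradlew, a small shell function can search the parent directories for the Wrapper script. The following is a sketch of that idea for POSIX shells; the function name gw is a hypothetical convention and not something Gradle provides.

```shell
#!/bin/sh
# Hypothetical convenience function: walk up from the current directory
# until an executable gradlew script is found, then run it with the
# given arguments. The working directory is left unchanged.
gw() {
    dir=$PWD
    while :; do
        if [ -x "$dir/gradlew" ]; then
            "$dir/gradlew" "$@"
            return $?
        fi
        # Stop once the filesystem root has been checked.
        [ "$dir" = "/" ] && break
        dir=$(dirname "$dir")
    done
    echo "gw: no gradlew found in $PWD or any parent directory" >&2
    return 1
}
```

With this function loaded, running gw tasks inside a-subproject would behave like ../gradlew tasks. Because the working directory is not changed, the build is still invoked from the subproject directory.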

3. Upgrading the Gradle Wrapper

Projects typically want to keep up with the times and upgrade their Gradle version to benefit from new features and improvements.

The recommended option is to run the wrapper task and provide the target Gradle version as described in Adding the Gradle Wrapper.

Using the wrapper task ensures that any optimizations made to the Wrapper shell script or batch file with that specific Gradle version are applied to the project.

As usual, you should commit the changes to the Wrapper files to version control.

Note that running the wrapper task once will update gradle-wrapper.properties only, but leave the wrapper itself in gradle-wrapper.jar untouched.

This is usually fine as new versions of Gradle can be run even with older wrapper files.

Note: If you want all the wrapper files to be completely up-to-date, you will need to run the wrapper task a second time.

The following command upgrades the Wrapper to the latest version:

$ ./gradlew wrapper --gradle-version latest // macOS, Linux
$ gradlew.bat wrapper --gradle-version latest // Windows

BUILD SUCCESSFUL in 4s
1 actionable task: 1 executed

The following command upgrades the Wrapper to a specific version:

$ ./gradlew wrapper --gradle-version 9.3.1 // macOS, Linux
$ gradlew.bat wrapper --gradle-version 9.3.1 // Windows

BUILD SUCCESSFUL in 4s
1 actionable task: 1 executed

Once you have upgraded the wrapper, you can check that it’s the version you expected by executing ./gradlew --version.

Tip: Don’t forget to run the wrapper task again to download the Gradle distribution binaries (if needed) and update the gradlew and gradlew.bat files.

Another way to upgrade the Gradle version is by manually changing the distributionUrl property in the Wrapper’s gradle-wrapper.properties file.

The tip above also applies in this case.

Note: Since Gradle 9.0.0, the version always uses the X.Y.Z format. Using only the major or minor version is not supported in gradle-wrapper.properties.

Customizing the Gradle Wrapper

Most users of Gradle are happy with the default runtime behavior of the Wrapper. However, organizational policies, security constraints or personal preferences might require you to dive deeper into customizing the Wrapper.

Thankfully, the built-in wrapper task exposes numerous options to bend the runtime behavior to your needs.

Most configuration options are exposed by the underlying task type Wrapper.

Let’s assume you grew tired of defining the -all distribution type on the command line every time you upgrade the Wrapper.

You can save yourself some keyboard strokes by re-configuring the wrapper task.

build.gradle.kts:

tasks.wrapper {
    distributionType = Wrapper.DistributionType.ALL
}

build.gradle:

tasks.named('wrapper') {
    distributionType = Wrapper.DistributionType.ALL
}

With the configuration in place, running ./gradlew wrapper --gradle-version 9.3.1 is enough to produce a distributionUrl value in the Wrapper properties file that will request the -all distribution:

distributionUrl=https\://services.gradle.org/distributions/gradle-9.3.1-all.zip

Check out the API documentation for a more detailed description of the available configuration options. You can also find various samples for configuring the Wrapper in the Gradle distribution.

Authenticated Gradle distribution download

The Gradle Wrapper can download Gradle distributions from servers using HTTP Basic Authentication.

This enables you to host the Gradle distribution on a private protected server.

You can specify a username and password in two different ways depending on your use case: as system properties or directly embedded in the distributionUrl.

Credentials in system properties take precedence over the ones embedded in distributionUrl.

|

Tip

|

HTTP Basic Authentication should only be used with |

System properties can be specified in the .gradle/gradle.properties file in the user’s home directory or by other means.

To specify the HTTP Basic Authentication credentials, add the following lines to the system properties file:

systemProp.gradle.wrapperUser=username
systemProp.gradle.wrapperPassword=password

Embedding credentials in the distributionUrl in the gradle/wrapper/gradle-wrapper.properties file also works.

Please note that this file is to be committed into your source control system.

Tip: Shared credentials embedded in distributionUrl should only be used in a controlled environment.

To specify the HTTP Basic Authentication credentials in distributionUrl, add the following line:

distributionUrl=https://username:password@somehost/path/to/gradle-distribution.zip

This can be used in conjunction with a proxy, authenticated or not.

See Accessing the web via a proxy for more information on how to configure the Wrapper to use a proxy.

Verification of downloaded Gradle distributions

The Gradle Wrapper allows for verification of the downloaded Gradle distribution via SHA-256 hash sum comparison. This increases security against targeted attacks by preventing a man-in-the-middle attacker from tampering with the downloaded Gradle distribution.

To enable this feature, download the .sha256 file associated with the Gradle distribution you want to verify.

Downloading the SHA-256 file

You can download the .sha256 file from the stable releases or release candidate and nightly releases.

The format of the file is a single line of text that is the SHA-256 hash of the corresponding zip file.

You can also reference the list of Gradle distribution checksums.
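The same comparison can be done by hand to double-check a distribution you downloaded yourself. The sketch below is an illustration rather than what the Wrapper runs internally: the function name verify_distribution is hypothetical, and it assumes the sha256sum utility is available, falling back to shasum -a 256 where it is not.

```shell
#!/bin/sh
# Hypothetical helper: compare a ZIP's SHA-256 hash with the single-line
# .sha256 file published next to it. Returns 0 on a match.
verify_distribution() {
    zip_file="$1"
    sha_file="$2"
    # The .sha256 file holds just the hex digest; drop any whitespace.
    expected=$(tr -d '[:space:]' < "$sha_file")
    if command -v sha256sum >/dev/null 2>&1; then
        actual=$(sha256sum "$zip_file" | cut -d ' ' -f 1)
    else
        actual=$(shasum -a 256 "$zip_file" | cut -d ' ' -f 1)
    fi
    if [ "$expected" = "$actual" ]; then
        echo "OK: checksum matches"
    else
        echo "MISMATCH: expected $expected but got $actual" >&2
        return 1
    fi
}
```

For example, verify_distribution gradle-9.3.1-bin.zip gradle-9.3.1-bin.zip.sha256 would compare a downloaded distribution against its published hash, assuming both files sit in the current directory.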

Configuring checksum verification

Add the downloaded (SHA-256 checksum) hash sum to gradle-wrapper.properties using the distributionSha256Sum property or use --gradle-distribution-sha256-sum on the command-line: